| Hi all, I am writing to both the JGit and Gerrit mailing lists because the problem could be of interest to both communities.

I am able to systematically reproduce a fatal memory leak that causes the complete collapse of the JVM.

Repos: the Android repos
Test case: `for (all repos); do clone and git GC; done`

P.S. The traffic is serialised, so we can be certain that the threads have finished their jobs before the next operation starts.
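The serialised workload can be pictured as a strictly sequential loop. The Java sketch below is only an illustration under stated assumptions. `cloneRepo` and `gcRepo` are hypothetical placeholders for the real clone request and the JGit GC. The single-thread executor, together with `Future.get()`, stands in for whatever harness actually drives the traffic.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class SerialWorkload {

    // Hypothetical placeholders: a real harness would clone from Gerrit
    // and trigger a JGit GC here; this sketch only records the operation.
    static void cloneRepo(String repo, List<String> log) { log.add("clone " + repo); }
    static void gcRepo(String repo, List<String> log) { log.add("gc " + repo); }

    /** Runs clone + GC for every repo, one operation at a time. */
    static List<String> run(List<String> repos) {
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        // A single-thread executor runs tasks in submission order; waiting on
        // each Future guarantees the previous job is done before the next starts.
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            for (String repo : repos) {
                executor.submit(() -> cloneRepo(repo, log)).get();
                executor.submit(() -> gcRepo(repo, log)).get();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            executor.shutdown();
        }
        return log;
    }

    public static void main(String[] args) {
        // Hypothetical repository names, for illustration only.
        System.out.println(run(List.of("repo-a", "repo-b")));
    }
}
```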

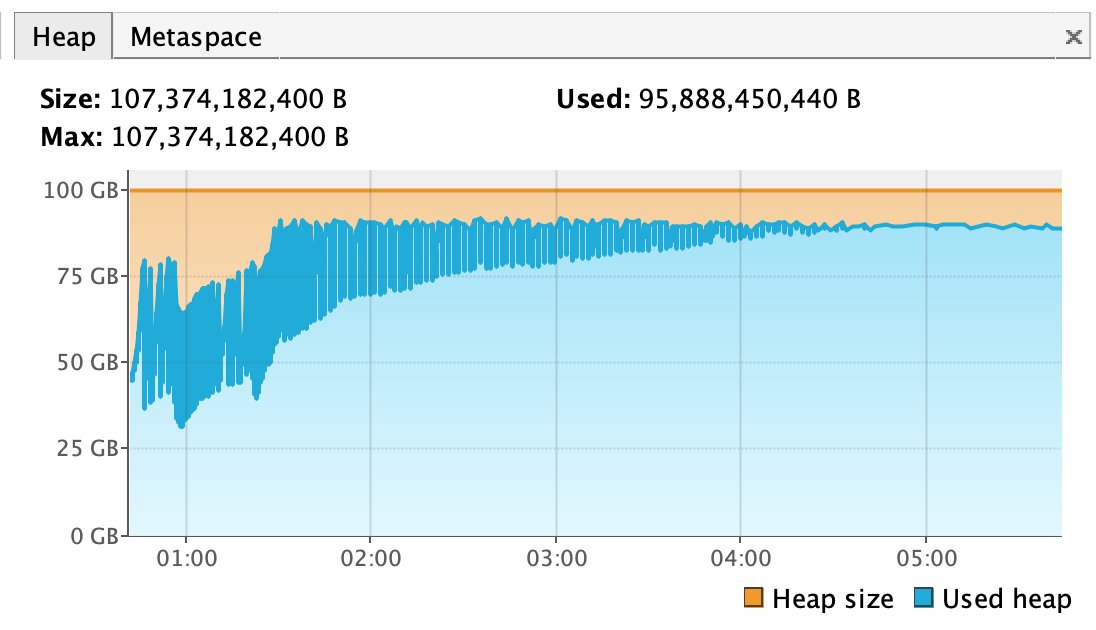

The scenario is quite simple: generate constant traffic of continuous clones and JGit GCs, and after a few hours the JVM is completely dead. By using G1GC and tuning it, I managed to avoid almost all stop-the-world (STW) GC cycles. However, memory utilisation increases continuously, and in the end the GC goes into an infinite loop, trying to release memory without being able to do so.

Sample of the GC log after the JVM has collapsed: `2019-06-11T08:15:27.340+0200: 28506.935: [Full GC (Allocation Failure) 90G->89G(100G), 106.4610300 secs] [Eden: 0.0B(35.0G)->0.0B(35.0G) Survivors: 0.0B->0.0B Heap: 90.5G(100.0G)->89.2G(100.0G)], [Metaspace: 61559K->61559K(65536K)]`
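Expressed as numbers, that log line shows the heap going from 90.5G to 89.2G out of 100G. A Full GC of more than 100 seconds therefore reclaims only about 1.3G. The small Java sketch below extracts those figures. It assumes the JDK 8-style G1 log format quoted above, and other JDK versions log differently. The class and method names are hypothetical.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class FullGcLine {

    // "Heap: <before>G(<cap>G)-><after>G(<cap>G)" as in the JDK 8-style G1 line.
    private static final Pattern HEAP =
        Pattern.compile("Heap: ([0-9.]+)G\\([0-9.]+G\\)->([0-9.]+)G\\(([0-9.]+)G\\)");
    // The pause duration, e.g. ", 106.4610300 secs]".
    private static final Pattern PAUSE = Pattern.compile(", ([0-9.]+) secs\\]");

    static final class FullGc {
        final double beforeG, afterG, capacityG, pauseSecs;

        FullGc(double beforeG, double afterG, double capacityG, double pauseSecs) {
            this.beforeG = beforeG;
            this.afterG = afterG;
            this.capacityG = capacityG;
            this.pauseSecs = pauseSecs;
        }

        double reclaimedG() { return beforeG - afterG; }
        double occupancyAfter() { return afterG / capacityG; }
    }

    static FullGc parse(String line) {
        Matcher heap = HEAP.matcher(line);
        Matcher pause = PAUSE.matcher(line);
        if (!heap.find() || !pause.find()) {
            throw new IllegalArgumentException("not a G1 Full GC line: " + line);
        }
        return new FullGc(
            Double.parseDouble(heap.group(1)),
            Double.parseDouble(heap.group(2)),
            Double.parseDouble(heap.group(3)),
            Double.parseDouble(pause.group(1)));
    }

    public static void main(String[] args) {
        String line = "2019-06-11T08:15:27.340+0200: 28506.935: [Full GC (Allocation Failure) "
            + "90G->89G(100G), 106.4610300 secs] [Eden: 0.0B(35.0G)->0.0B(35.0G) "
            + "Survivors: 0.0B->0.0B Heap: 90.5G(100.0G)->89.2G(100.0G)], "
            + "[Metaspace: 61559K->61559K(65536K)]";
        FullGc gc = parse(line);
        System.out.println(String.format(Locale.ROOT,
            "reclaimed %.1fG in %.1fs; heap still %.0f%% full",
            gc.reclaimedG(), gc.pauseSecs, 100 * gc.occupancyAfter()));
    }
}
```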

And the JVM heap utilization:

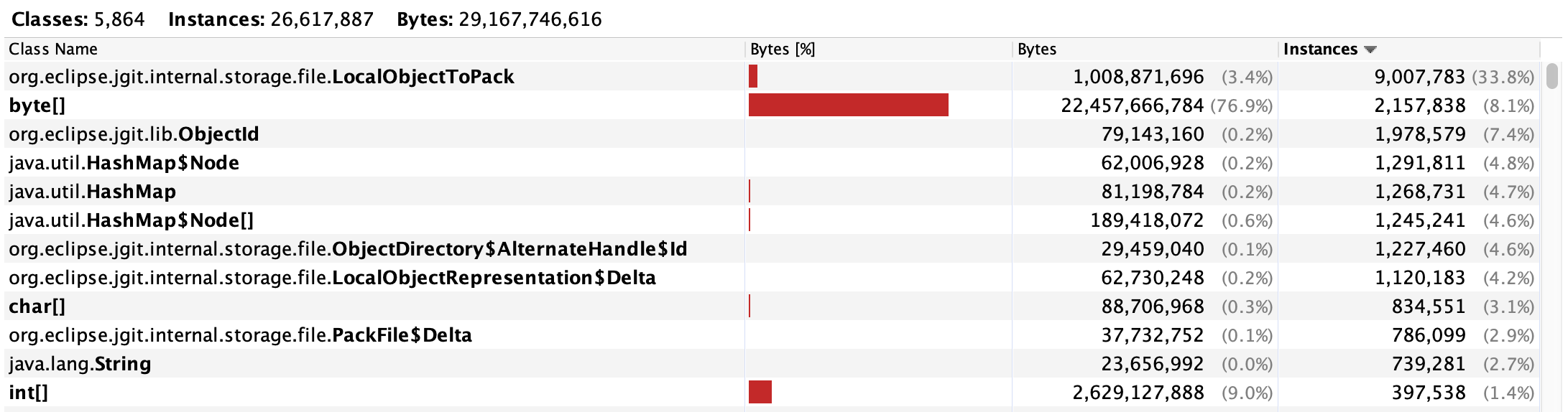

Regarding the memory dump, these are the top offenders:

I have explicitly set low limits for caching packfiles in gerrit.config:

[core]
	packedGitLimit = 10m
	packedGitOpenFiles = 16000
	packedGitWindowSize = 64k
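As a rough sanity check of what these limits allow, the Java sketch below converts the size suffixes and divides the cache limit by the window size. It assumes binary suffixes (k = 1024, m = 1024², g = 1024³), which is how git config defines them. Treating packedGitLimit / packedGitWindowSize as the maximum number of cached windows is also an approximation, and JGit's actual accounting may differ. `parseSize` is a hypothetical helper, not a JGit or Gerrit API.

```java
import java.util.Locale;

public class WindowCacheMath {

    // Hypothetical helper: parses a git-config style size such as "10m" or "64k".
    // Assumes binary suffixes (k = 2^10, m = 2^20, g = 2^30); plain numbers are bytes.
    static long parseSize(String value) {
        String v = value.trim().toLowerCase(Locale.ROOT);
        long multiplier;
        switch (v.charAt(v.length() - 1)) {
            case 'k': multiplier = 1L << 10; break;
            case 'm': multiplier = 1L << 20; break;
            case 'g': multiplier = 1L << 30; break;
            default: return Long.parseLong(v);
        }
        return Long.parseLong(v.substring(0, v.length() - 1)) * multiplier;
    }

    public static void main(String[] args) {
        long limit = parseSize("10m");  // packedGitLimit
        long window = parseSize("64k"); // packedGitWindowSize
        // Approximate upper bound on windows held by the cache at once.
        System.out.printf("limit=%d B, window=%d B, max windows ~ %d%n",
                          limit, window, limit / window);
    }
}
```

Under these assumptions, the window cache should top out at roughly 160 windows of 64 KiB each, or about 10 MiB in total.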

In theory, JGit shouldn't hold a huge amount of memory for packfiles; instead, it should continuously read them from the filesystem. However, as you can see from the figures above, we have over 2M byte arrays holding over 22 GB of memory.
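Here are those figures taken at face value. Both are lower bounds, and decimal units are assumed because the dump tool's units are not stated. On that basis, the retained arrays average around 11 KB each, and their total is of the order of two thousand times the configured 10m packedGitLimit:

$$
\frac{22 \times 10^{9}\,\mathrm{B}}{2 \times 10^{6}} \approx 1.1 \times 10^{4}\,\mathrm{B} \approx 11\,\mathrm{KB},
\qquad
\frac{22\,\mathrm{GB}}{10\,\mathrm{MB}} \sim 2 \times 10^{3}
$$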

I also have a ~/.gitconfig with a specific [pack] configuration:

[pack]
	maxDeltaDepth=5
	deltaSearchWindowSize=10
	deltaSearchMemoryLimit=0
	deltaCacheSize=52428800
	deltaCacheLimit=100
	compressionLevel=-1
	indexVersion=2
	bigFileThreshold=52428800
	threads=1
	reuseDeltas=true
	reuseObjects=true
	deltaCompress=false
	buildBitmaps=true
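For reference, the two byte-valued settings in this block, deltaCacheSize and bigFileThreshold, are both set to exactly 50 MiB:

$$
52428800\,\mathrm{B} = 50 \times 2^{20}\,\mathrm{B} = 50\,\mathrm{MiB}
$$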

What seems strange to me is the high number of LocalObjectToPack instances held in memory (9M objects): ObjectToPack should only be a temporary object used by PackWriter, but I am not an expert on that part of the code.
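To make "temporary" concrete: the per-object records should only be reachable while a writer is in use. The Java sketch below is a hypothetical model, not JGit code. `ScopedWriter` and `Entry` are invented names that stand in for the roles of PackWriter and LocalObjectToPack. If millions of such records survive Full GCs, something must still be holding a reference to them.

```java
import java.util.ArrayList;
import java.util.List;

public class WriterScopedEntries {

    // Hypothetical per-object bookkeeping record (the role LocalObjectToPack
    // plays); NOT the JGit class.
    static final class Entry {
        final String objectId;
        Entry(String objectId) { this.objectId = objectId; }
    }

    // Hypothetical writer whose entries should live only while it is open.
    static final class ScopedWriter implements AutoCloseable {
        private final List<Entry> entries = new ArrayList<>();

        void add(String objectId) { entries.add(new Entry(objectId)); }
        int liveEntries() { return entries.size(); }

        @Override
        public void close() {
            // Drop the references so the entries become unreachable even if
            // something else still holds on to the writer object itself.
            entries.clear();
        }
    }

    public static void main(String[] args) {
        ScopedWriter writer = new ScopedWriter();
        try (writer) {
            for (int i = 0; i < 3; i++) {
                writer.add("object-" + i);
            }
            System.out.println("while writing: " + writer.liveEntries());
        }
        System.out.println("after close: " + writer.liveEntries());
    }
}
```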

Does this ring a bell for anyone?

Thanks for the feedback.
Luca

|