Hi,

Hiho,

Thank you for your feedback! My comments are inline.

I would like to propose an

improvement:

I think "user feedback and usage" is a key point in evaluating
the maturity of a project. You can have a project with very nice
code, responsive support, and a good process for its developers,
but poor maturity from the point of view of end users because
its features are useless, its ergonomics are bad, etc.

First, I propose adding a 4th category to the quality model [2]:
the Community category is mostly focused on the creators of the
technology and those who help the project get adopted. So, in my
opinion, user feedback and usage should not be consolidated into
that same category.

Let's call it "Usage" for the rest of my email.

+1 -- sounds good.

Let's move "installed base" into this Usage category. For that
one, you propose an installation survey.

+1

You should also add a metric for the number of downloads. For
me, a project that is not used cannot be promoted as Mature.

Well, let's have a word about metrics.

One big point with the process is that *all* metrics needed to
compute the quality attributes must be *reliably* available for all
projects. Suppose one project doesn't provide, say, a maturity
evaluation, while another takes the time to measure it and gets bad
results. In such a case the former should not get a better rating
than the latter, because that would encourage projects not to measure
the things they need to improve. This holds for many metrics,
especially when they may not have the same meaning from one case to
the other and when they cannot be collected automatically.
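
To illustrate the point, here is a minimal sketch in Python of one way to keep an unmeasured metric from beating a measured one: a missing value is simply counted as the worst rating. The 1-to-5 scale, the metric names and the `attribute_score` function are assumptions made up for this example; they are not part of the PolarSys quality model.

```python
from typing import Mapping, Optional, Sequence

# Hypothetical rating scale: 1 is the worst rating, 5 the best.
WORST, BEST = 1, 5


def attribute_score(required: Sequence[str],
                    measured: Mapping[str, Optional[float]]) -> float:
    """Average the ratings of all required metrics.

    A metric that is absent (or None) is counted as WORST, so a project
    cannot improve its score by not measuring something.
    """
    ratings = []
    for name in required:
        value = measured.get(name)
        ratings.append(WORST if value is None else value)
    return sum(ratings) / len(ratings)


# Hypothetical metric names, for illustration only.
required = ["downloads", "bug_fix_time"]
silent_project = {"bug_fix_time": 4}                  # did not measure downloads
honest_project = {"downloads": 2, "bug_fix_time": 4}  # measured, poor result

print(attribute_score(required, silent_project))
print(attribute_score(required, honest_project))
```

With this rule, and all else being equal, a project that skips a metric never scores above a project that measured it, whatever the measured result.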

You should be able to calculate this

metric from statistics of EF infrastructure (apache logs on

updatesite, marketplace metrics, downloads of bundles when the

project is already bundled, etc). Be careful to remove from the

log stats any hudson/jenkins which consume updatesite: they

don't reflect a real number of users.

As for downloads, they are certainly useful information, but we have
to be careful about consistency across projects: some components
may use different means to install or download, as highlighted by
Raphael in a later email. However, I like the idea of measuring them
through different means (logs, marketplace, downloads of bundles...),
which helps counteract some side effects.
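
As a rough sketch of the log-based part, the following Python snippet counts distinct client hosts that successfully fetched something from an update site, skipping requests that look like they come from Hudson/Jenkins. It assumes the logs are in the Apache "combined" format and that CI traffic can be recognised either by a substring in the user agent or by a list of known build hosts; both are assumptions, since CI jobs do not necessarily identify themselves that way. The function and parameter names are invented for this example.

```python
import re

# Apache "combined" format:
# host ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Assumption: CI clients can be spotted by these user-agent substrings.
CI_MARKERS = ("hudson", "jenkins")


def count_client_hosts(lines, ci_markers=CI_MARKERS, ci_hosts=frozenset()):
    """Count distinct hosts with at least one successful non-CI GET."""
    hosts = set()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # not a combined-format line
        if match.group("method") != "GET" or match.group("status") != "200":
            continue  # keep successful downloads only
        agent = match.group("agent").lower()
        host = match.group("host")
        if host in ci_hosts or any(marker in agent for marker in ci_markers):
            continue  # build-server traffic, not a real user
        hosts.add(host)
    return len(hosts)


sample = [
    '10.0.0.1 - - [22/Aug/2014:16:57:00 +0200] "GET /updates/content.jar HTTP/1.1" 200 1234 "-" "Mozilla/5.0"',
    '10.0.0.1 - - [22/Aug/2014:16:58:00 +0200] "GET /updates/artifacts.jar HTTP/1.1" 200 999 "-" "Mozilla/5.0"',
    '10.0.0.2 - - [22/Aug/2014:16:59:00 +0200] "GET /updates/content.jar HTTP/1.1" 200 1234 "-" "Jenkins/1.5"',
    '10.0.0.3 - - [22/Aug/2014:17:00:00 +0200] "GET /updates/missing.jar HTTP/1.1" 404 0 "-" "Mozilla/5.0"',
]
print(count_client_hosts(sample))
```

Counting hosts rather than requests is a design choice for this sketch: one installation usually triggers many requests, so raw hit counts would overstate usage. Distinct hosts are still only a proxy for users (proxies and shared addresses blur the picture).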

It would also be interesting to measure the diversity of the
users. For example, if a project is used only by academics or by
a single large company, can we say it is mature?

Interesting idea, although very difficult to measure accurately: we
do not have a reliable mapping between users and their fields.

My next major proposal is to allow users to give precise
feedback on the project, so that you can use it automatically in
the maturity assessment.

My idea is to reuse the existing Eclipse Marketplace to host
this feedback. We can reuse ideas from other marketplaces like
the Play Store or the App Store, where users can write a review
and also give a rating.

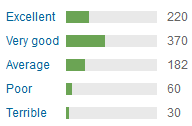

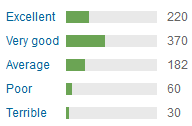

So, you will have this kind of result:

To make the vote more precise, there are two alternatives:
propose a fixed list of criteria, or allow a free list of pros
and cons. The first option is easier to tool up, but the second
is more useful for the project committers (mostly to know what
users like, which is information that is very hard to find today).

Very interesting. As for the last paragraph, we can use this
information even if it does not feed into the rating itself: we
also aim to propose sound and pragmatic advice to improve the
project, and your proposal definitely fits that goal.

For this second option, I saw a website that uses it (I can't
remember which one), and it worked like this: there are two
lists to fill in, where users can freely add new lines. (Let's
use this example for a single piece of feedback):

- What do you like?
  - performance
  - nice icons
- What do you dislike?
  - no Linux compatibility

Then you just have to create a simple analyzer that consolidates
the top 10 positive and negative feedback items:

+
  performance (80)
  nice icons (15)
  lazy loading (8)
-
  everything is perfect (200)
  project logo (20)

Sounds good..
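
For what it's worth, the consolidation step could be sketched in Python along these lines. The input shape (one dict per feedback with `likes` and `dislikes` lists) and the `normalise` and `consolidate` names are assumptions for this example; nothing here reflects an existing Marketplace API.

```python
from collections import Counter


def normalise(entry: str) -> str:
    """Lower-case an entry and collapse whitespace so near-duplicates merge."""
    return " ".join(entry.lower().split())


def consolidate(feedbacks, top=10):
    """Return the `top` most frequent likes and dislikes as (text, count) lists."""
    likes, dislikes = Counter(), Counter()
    for feedback in feedbacks:
        # A set per feedback: the same user repeating an item counts once.
        likes.update({normalise(e) for e in feedback.get("likes", [])})
        dislikes.update({normalise(e) for e in feedback.get("dislikes", [])})
    return likes.most_common(top), dislikes.most_common(top)


feedbacks = [
    {"likes": ["performance", "nice icons"],
     "dislikes": ["no linux compatibility"]},
    {"likes": ["Performance "], "dislikes": []},
]
top_likes, top_dislikes = consolidate(feedbacks)
print(top_likes)
print(top_dislikes)
```

Note that free-text entries only merge when they are identical after normalisation, so "fast" and "performance" would stay separate; grouping synonyms would need extra work beyond this sketch.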

As with mobile apps, the Eclipse Marketplace Client could offer
an API so that Eclipse plug-ins can invite users to vote directly
from the IDE itself. That way, we could receive feedback from
real end users, and not only from visitors to the Eclipse
websites.

More tooling needed, so won't fix in the first iteration I guess.

Definitely interesting though.

--

boris

Etienne JULIOT

Vice President, Obeo

On 22/08/2014 16:57, Boris Baldassari wrote:

Hiho dear colleagues,

A lot of work has been done recently around the maturity
assessment initiative, and we thought it would be good to let
you know about it and get your feedback.

* The PolarSys quality model has been improved and formalised.
It is thoroughly presented in the PolarSys wiki [1a], with the
metrics [1b] and measurement concepts [1c] used. The
architecture of the prototype [1d] has also been updated,
following discussions with Gaël Blondelle and Jesus
Gonzalez-Barahona from Bitergia.

* A nice visualisation of the quality model has been developed
[2] using d3js, which summarises the most important ideas and
concepts. The description of metrics and measurement concepts
still has to be enhanced, but the quality model itself is
almost complete. Please feel free to comment and contribute.

* A GitHub repo has been created [3], holding

all definition files for the quality model itself, metrics and

measurement concepts. It also includes

a set of scripts used to check and manipulate the definition

files, and to visualise some specific parts of the system.

* We are setting up the necessary information and framework

for the rule-checking tools: PMD and FindBugs for now, others

may follow. Rules are classified according to the quality

attributes they impact, which is of great importance to

provide sound advice regarding the good and bad practices

observed in the project.

Help us help you! If you would like to participate and see

what this on-going work can bring to your project, please feel

free to contact me. This is also the opportunity to better

understand how projects work and how we can do better

together, realistically.

Sincerely yours,

--

Boris

[1a] https://polarsys.org/wiki/EclipseQualityModel

[1b] https://polarsys.org/wiki/EclipseMetrics

[1c] https://polarsys.org/wiki/EclipseMeasurementConcepts

[1d] https://polarsys.org/wiki/MaturityAssessmentToolsArchitecture

[2] http://borisbaldassari.github.io/PolarsysMaturity/qm/polarsys_qm_full.html

[3] https://github.com/borisbaldassari/PolarsysMaturity

_______________________________________________

polarsys-iwg mailing list

polarsys-iwg@xxxxxxxxxxx

To change your delivery options, retrieve your password, or unsubscribe from this list, visit

https://dev.eclipse.org/mailman/listinfo/polarsys-iwg
