[

Date Prev][

Date Next][

Thread Prev][

Thread Next][

Date Index][

Thread Index]

[

List Home]

|

[jetty-users] Jetty thread spins onIncompleteFlush / SslConnection$1.run forever

|

Hi everyone,

We've observed runaway Jetty threads in our production environment.

The remaining threads seem to continue serving traffic just fine, but two of them are totally stuck, seemingly in a spinloop.

We run Jetty 9.4.11.v20180605 on Java 9.0.4

You can see the difference by comparing a couple of our threads:

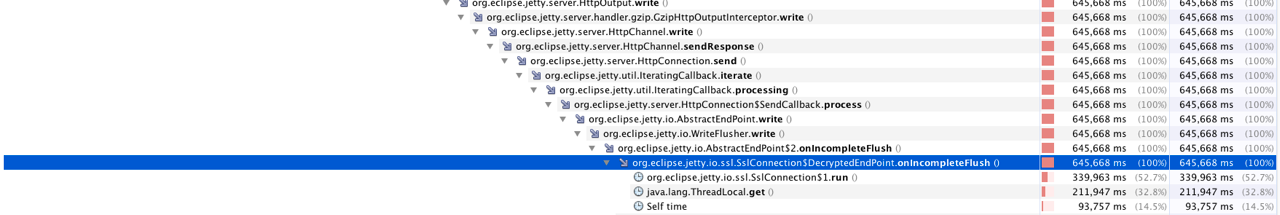

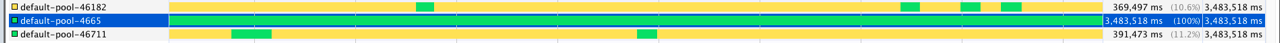

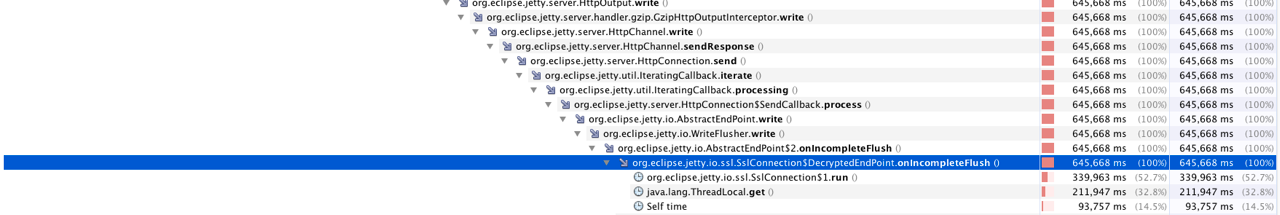

[ The "hung" thread has an order of magnitude more CPU time than any other worker thread ]

By sampling the stacks, I am able to notice a couple of hot spots:

"default-pool-4665" #4665 prio=5 os_prio=0 tid=0x00000000013c5000 nid=0x1258 runnable [0x00007ff9eb1ef000]

java.lang.Thread.State: RUNNABLE

at org.eclipse.jetty.io.ssl.SslConnection$1.run(SslConnection.java:121)

at org.eclipse.jetty.io.ssl.SslConnection$DecryptedEndPoint.onIncompleteFlush(SslConnection.java:545)

at org.eclipse.jetty.io.AbstractEndPoint$2.onIncompleteFlush(AbstractEndPoint.java:54)

at org.eclipse.jetty.io.WriteFlusher.write(WriteFlusher.java:332)

at org.eclipse.jetty.io.AbstractEndPoint.write(AbstractEndPoint.java:380)

at org.eclipse.jetty.server.HttpConnection$SendCallback.process(HttpConnection.java:804)

at org.eclipse.jetty.util.IteratingCallback.processing(IteratingCallback.java:241)

at org.eclipse.jetty.util.IteratingCallback.iterate(IteratingCallback.java:224)

at org.eclipse.jetty.server.HttpConnection.send(HttpConnection.java:538)

at org.eclipse.jetty.server.HttpChannel.sendResponse(HttpChannel.java:795)

"default-pool-4665" #4665 prio=5 os_prio=0 tid=0x00000000013c5000 nid=0x1258 runnable [0x00007ff9eb1ef000]

java.lang.Thread.State: RUNNABLE

at java.lang.ThreadLocal$ThreadLocalMap.getEntryAfterMiss(java.base@9.0.4/ThreadLocal.java:443)

at java.lang.ThreadLocal$ThreadLocalMap.getEntry(java.base@9.0.4/ThreadLocal.java:418)

at java.lang.ThreadLocal$ThreadLocalMap.access$000(java.base@9.0.4/ThreadLocal.java:298)

at java.lang.ThreadLocal.get(java.base@9.0.4/ThreadLocal.java:163)

at org.eclipse.jetty.io.ssl.SslConnection$DecryptedEndPoint.onIncompleteFlush(SslConnection.java:547)

at org.eclipse.jetty.io.AbstractEndPoint$2.onIncompleteFlush(AbstractEndPoint.java:54)

at org.eclipse.jetty.io.WriteFlusher.write(WriteFlusher.java:332)

at org.eclipse.jetty.io.AbstractEndPoint.write(AbstractEndPoint.java:380)

at org.eclipse.jetty.server.HttpConnection$SendCallback.process(HttpConnection.java:804)

at org.eclipse.jetty.util.IteratingCallback.processing(IteratingCallback.java:241)

at org.eclipse.jetty.util.IteratingCallback.iterate(IteratingCallback.java:224)

In a series of dozens of samples, I never saw a stack that was outside AEP.write, every stack trace I got looked like this.

Note how 'ThreadLocal.get' is 1/3 of the time -- this must be a *hot* loop!

I attempted to up the logging level to DEBUG for the WriteFlusher and SslConnection, but unfortunately Jetty

uses this pattern to boost performance in WriteFlusher:

private static final boolean DEBUG = LOG.isDebugEnabled(); // Easy for the compiler to remove the code if DEBUG==false

which means my attempts to increase debugging without restarting were totally ineffective.

(Is the performance boost here worth the inability to change logging levels at runtime? Pretty annoying in this case! And the JIT ought to be able to elide it all the same if it is actually disabled.)

I dumped the heap and inspected the state of the thread directly.

I observe the following state, an arbitrary point-in-time:

WriteFlusher._state = IDLE

HttpConnectionOverHTTP.state = CLOSED

SslConnection$DecryptedEndPoint._state = OPEN

SSLEngineImpl.connectionState = 2 ( DATA )

SocketChannelImpl.closed = false

I verified (with lsof -i and netstat -a) that the socket is no longer known to the kernel (or, at least, nobody is sitting on the HttpConnectionOverHTTP._endPoint._local.port)

To me, it looks like the thread is stuck in a hot loop where it sees an incomplete flush, "tries again". But the connection is not open, so no data is written,

so it makes no forward progress but also seems to never exit.

I note that in DecryptedEndPoint.onIncompleteFlush, the loop preamble checks `isOutputShutdown()` but then we never re-check for a closed socket inside the __tryWriteAgain loop.

Inside SslConnection$1.run, we call WriteFlusher.completeWrite(), which checks things like:

if (previous.getType() != StateType.PENDING)

return; // failure already handled.

If WriteFlusher state is IDLE, this returns immediately. (I can't know what the state was at the time, but I see a number of early exit paths in the code).

So my current hypothesis is that the socket was asynchronously closed in the middle of a retry, such that it neither ever succeeds nor fails, but just burns CPU.

I'm not very familiar with this code, but hopefully someone here can help guide us to understand what is going on. I see that `try_again` is only set in a //TODO block so I worry that we might have hit a bug or unfortunate race condition. In particular,

// Keep running complete write until

__tryWriteAgain.set(Boolean.FALSE);

do

{

_runCompleteWrite.run();

} while (Boolean.TRUE.equals(__tryWriteAgain.get()));

seems that it would possibly never terminate, since we never set __tryWriteAgain inside the do/while, only in a finally outside.

Thanks for any guidance or suggestions!

Best,

Steven |

Attachment:

signature.asc

Description: Message signed with OpenPGP using GPGMail