April 23, 2024

Nuanced String Joining in Java

by Donald Raab at April 23, 2024 06:03 AM

Subtle differences sometimes make a big difference

Starting with an Integer array

I opened up my IntelliJ IDE and created an Integer array. I don’t usually like boxing primitives but wanted to see what interesting things I could discover about Eclipse Collections and Java Stream without immediately jumping into primitive types.

Integer[] numbers = {1, 2, 3, 4, 5};The first question I asked is “What can we do with this that is simple but interesting?“.” We can easily adapt this array in Java as a Stream.

Stream<Integer> stream = Stream.of(numbers);

We can also adapt it as an ArrayAdapter in Eclipse Collections.

ArrayAdapter<Integer> adapted = ArrayAdapter.adapt(numbers);

But what next? This is where our fun begins.

Let’s make a String

I wrote an article for 97 Things Every Java Programmer Should Know with the title “Learn to Kata and Kata to Learn.” In this article, I iterate through some example Java code using Collectors.joining() and String.join() to create a comma separated list of names. Then I turn the exercise into a code kata. I started with a List<String> and asserted some derived result of a joined String would equal the names in the List separated by commas.

This is a trivial example for both Collectors.joining and String.join. The example only involved a delimiter. It did not involve a prefix and a suffix. It also started off with String instances, which as we will see, is the perfect scenario for both Collectors.joining and String.join. Unfortunately, in the many classed world of object oriented programming, not everything starts out as a String.

Our problem today will start with the array of Integers values from 1 to 5 and converted them to a String starting with a “*”, separating elements with “*, *” and then finishing with a “*”.

For Loop

Joining an Integer array into a String with a for loop is a good exercise for us to start with.

@Test

public void loopArrayOfIntegerIntoAString()

{

Integer[] numbers = {1, 2, 3, 4, 5};

StringBuilder builder = new StringBuilder("*");

for (int i = 0; i < numbers.length; i++)

{

if (i > 0)

{

builder.append("*, *");

}

builder.append(numbers[i]);

}

String string = builder.append("*").toString();

Assertions.assertEquals("*1*, *2*, *3*, *4*, *5*", string);

}

We use StringBuilder here, but I started off with simple String concatenation. IntelliJ recommending applying an automated refactoring to convert the String concatentation to StringBuilder. So I did. The code is fairly simple here to understand. We iterate over the array using indexed access, so that we can check if we are not on the first (0th) element. Only the first element element will not have the “*, *” delimiter before it. A prefix of “*” and a suffix of “*” are added to the StringBuilder before and after iteration begins. The append method in StringBuilder is nice enough to call String.valueOf on each Integer value for us so we don’t have to. In the end, we assert we wind up with a String that matches this string “*1*, *2*, *3*, *4*, *5*”.

Stream.map, collect, and Collectors.joining

For the next implementation, we will use a Stream and Collectors.joining to create the String. Collectors.joining requires that elements are an instance of CharSequence (a parent interface of String). We will map each Integer to a String using String.valueOf before calling Collectors.joining.

@Test

public void streamAnArrayOfIntegerAndMapCollectorsJoining()

{

Integer[] numbers = {1, 2, 3, 4, 5};

// Collectors.joining requires elements to be CharSequence

// delimiter is first, followed by prefix and then suffix

Stream<Integer> stream = Stream.of(numbers);

String streamedJoining = stream

.map(String::valueOf)

.collect(Collectors.joining("*, *", "*", "*"));

Assertions.assertEquals("*1*, *2*, *3*, *4*, *5*", streamedJoining);

}

This code looks more concise than the for loop. However, it is rather annoying that StringBuilder append calls String.valueOf for us, and Collectors.joining does not. I also found it surprising that we see that joining takes the delimiter first, followed by the prefix and then the suffix. This does not mirror the output of the String.

String.join

For this implementation, we will used String.join. The String.join static method takes a CharSequence delimiter and either an array of CharSequence for the elements, or some kind of Iterable<CharSequence>. We can use the ArrayAdapter class from Eclipse Collections to convert the Integer array to an Iterable<CharSequence>.

@Test

public void adaptAnArrayOfIntegerAndStringJoin()

{

Integer[] numbers = {1, 2, 3, 4, 5};

Iterable<CharSequence> iterable = ArrayAdapter.adapt(numbers)

.asLazy()

.collect(String::valueOf);

// String.join only supports delimiter. Prefix and suffix are concatenated

// Requires elements to be a CharSequence[] or Iterable<CharSequence>

String stringJoin = "*" + String.join("*, *", iterable) + "*";

Assertions.assertEquals("*1*, *2*, *3*, *4*, *5*", stringJoin);

}

Here we use the ArrayAdapter, convert it to a LazyIterable (which is an Iterable) and collect String instances using String::valueOf. The most surprising thing I didn’t know about String.join is that there are no public overloads that take a prefix and suffix, like Collectors.joining has.

We can also use Stream to turn the Integer array into an Iterable<String>.

@Test

public void streamAnArrayOfIntegerAndStringJoin()

{

Integer[] numbers = {1, 2, 3, 4, 5};

Iterable<String> iterable =

Stream.of(numbers).map(String::valueOf)::iterator;

String stringJoin = "*" + String.join("*, *", iterable) + "*";

Assertions.assertEquals("*1*, *2*, *3*, *4*, *5*", stringJoin);

}

We use a method reference here of ::iterator on the Stream to convert it to an Iterable. This is a neat trick since Iterable only requires one method to be implemented, which is iterator.

ArrayAdapter.makeString

In the 97 Things article, I did not include a refactoring to use Eclipse Collections makeString. We will see how to use makeString, which is available on all RichIterable subtypes, below.

@Test

public void adaptAnArrayOfIntegerAndMakeString()

{

Integer[] numbers = {1, 2, 3, 4, 5};

ArrayAdapter<Integer> adapted = ArrayAdapter.adapt(numbers);

// makeString does not require elements to be CharSequence

// prefix is first, then delimiter and then suffix

String makeString = adapted.makeString("*", "*, *","*");

Assertions.assertEquals("*1*, *2*, *3*, *4*, *5*", makeString);

}

Similar to StringBuilder append, makeString applies the call to String.valueOf for us. The order of String parameters here is prefix, delimiter, suffix, which more closely mirrors the expected output.

ArrayIterate.makeString

The simplest solution we will see is using ArrayIterate.makeString from Eclipse Collections. Eclipse Collections has utility classes with the suffix of Iterate. There are Iterate, MapIterate, StringIterate, and ArrayIterate utility classes, that work with Iterable, Map, String, and Object array types respectively.

@Test

public void iterateAnArrayOfIntegerAndMakeString()

{

Integer[] numbers = {1, 2, 3, 4, 5};

String makeString =

ArrayIterate.makeString(numbers, "*", "*, *","*");

Assertions.assertEquals("*1*, *2*, *3*, *4*, *5*", makeString);

}

The makeString method on the utility classes behaves the same as on RichIterable subtypes. The method does not require the elements in the array to be some type of String or CharSequence already. The prefix comes first after the array parameter, followed by delimiter and then by suffix.

Looking for more nuance? Let’s get primitive.

Instead of an array of Integer, let’s switch to an array of int, and see what options we have to convert to a delimited String.

MutableIntList.makeString

We can convert an int array to a MutableIntList and use makeString on a primitive List.

@Test

public void mutableIntListMakeString()

{

int[] numbers = {1, 2, 3, 4, 5};

MutableIntList adapted = IntLists.mutable.with(numbers);

String makeString = adapted.makeString("*", "*, *", "*");

Assertions.assertEquals("*1*, *2*, *3*, *4*, *5*", makeString);

}

IntStream.of, boxed and mapToObject

We can create an IntStream using the int array with the static of method, and then box it to Integer using the method boxed. There are no primitive Collectors for primitive Streams, so boxing is our only option.

@Test

public void intStreamBoxedCollectorsJoining()

{

int[] numbers = {1, 2, 3, 4, 5};

Stream<Integer> boxed = IntStream.of(numbers).boxed();

String streamedJoining = boxed

.map(String::valueOf)

.collect(Collectors.joining("*, *", "*", "*"));

Assertions.assertEquals("*1*, *2*, *3*, *4*, *5*", streamedJoining);

}

We can also get rid of the boxing to Integer, and box straight from int to String using mapToObj with String.valueOf.

@Test

public void intStreamMapToStringCollectorsJoining()

{

int[] numbers = {1, 2, 3, 4, 5};

String string = IntStream.of(numbers)

.mapToObj(String::valueOf)

.collect(Collectors.joining("*, *", "*", "*"));

Assertions.assertEquals("*1*, *2*, *3*, *4*, *5*", string);

}

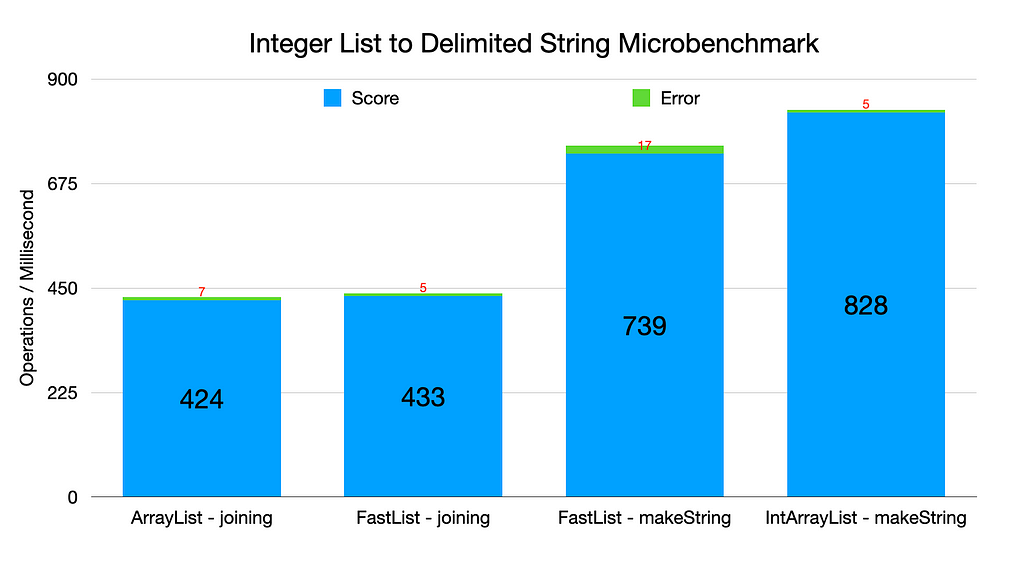

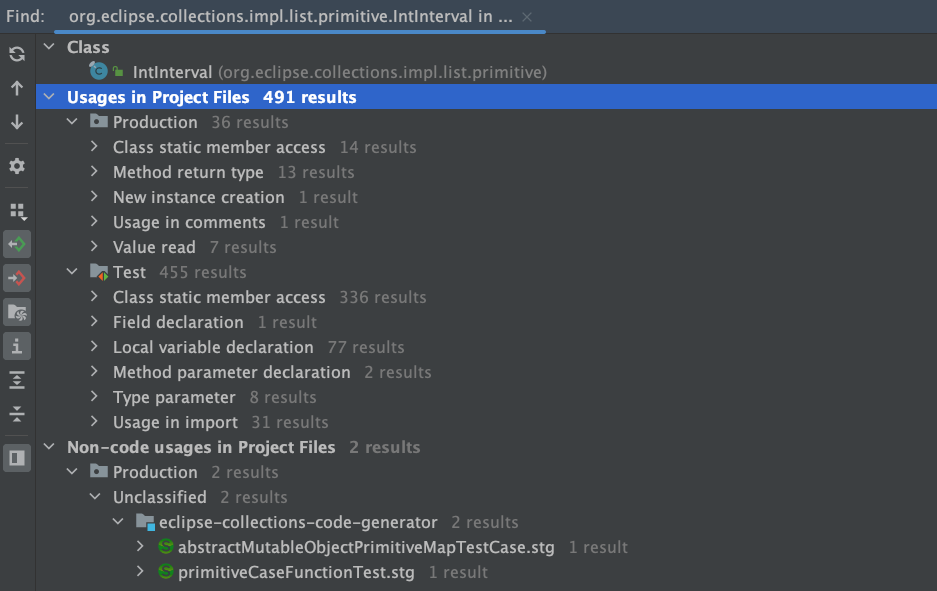

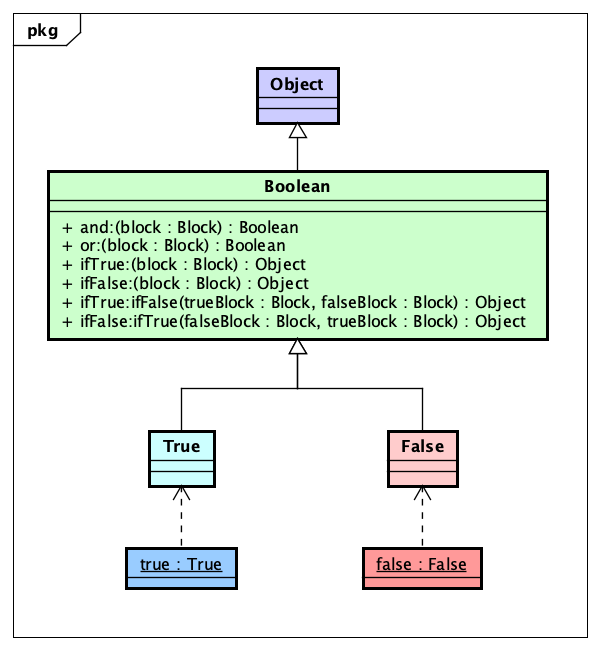

Update: Some Benchmarks

A reader commented that they would be interested in seeing some benchmarks. I was not really interested in spending time writing or running any benchmarks for these method comparisons, but I did find a set of benchmarks that already existed in the Eclipse Collections repo comparing Collectors.joining() on a Stream with makeString() in Eclipse Collections object and primitive types. So I decided to run the benchmarks while drinking some coffee today.

I set the SIZE of the collections to 100. I changed the TimeUnit to MILLISECONDS, so the measurements are in Operations per Millisecond.

I limited the tests to the following methods.

@Benchmark

public String serial_lazy_mapToStringJoining_jdk()

{

// Stream with an ArrayList<Integer> of size 100

return this.integersJDK.stream()

.map(Object::toString)

.collect(Collectors.joining(","));

}

@Benchmark

public String serial_lazy_mapToStringJoining_ec()

{

// Stream with a FastList<Integer> of size 100

return this.integersEC.stream()

.map(Object::toString)

.collect(Collectors.joining(","));

}

@Benchmark

public String serial_eager_makeString_ec()

{

// makeString with a FastList<Integer> of size 100

return this.integersEC.makeString(",");

}

@Benchmark

public String serial_eager_primitiveMakeString_ec()

{

// makeString with IntArrayList of size 100

return this.intList.makeString(",");

}

The results were obtained on my MacBook Pro M2 Max on Sonoma 14.4.1 using Azul Zulu JDK 21 and were as follows.

Benchmark Mode Cnt Score Error Units

serial_eager_makeString_ec thrpt 20 739.087 ± 17.148 ops/ms

serial_eager_primitiveMakeString_ec thrpt 20 828.930 ± 5.360 ops/ms

serial_lazy_mapToStringJoining_ec thrpt 20 433.049 ± 5.491 ops/ms

serial_lazy_mapToStringJoining_jdk thrpt 20 424.496 ± 7.619 ops/ms

The bigger the numbers, the better the performance. I do not personally find these kind of microbenchmark comparisons interesting. All the code examples here are extremely fast, and would only be a bottleneck in a system generating a million calls to these methods per second, or possibly from generating Strings from very large collections. More likely, there will be some other bottleneck in the code.

I prefer makeString because it requires less code and arguably makes the code easier to read. I hope folks would prefer makeString because it takes less code and is more readable, not because makeString is faster in this benchmark run than Collectors.joining(). If anyone finds these isolated microbenchmarks useful and wants to take the time to investigate to understand why the numbers are different, you have the source. Enjoy!

Summary

In this blog we saw how to use several approaches to convert the elements of an Integer array to a delimited String with a prefix and a suffix. The following are the methods we explored.

✅ for loop

✅ Stream.map, collect, and Collectors.joining

✅ String.join

✅ ArrayAdapter.makeString

✅ ArrayIterate.makeString

We then explored converting the Integer array to an int array, and looked at how we can convert that to a delimited String.

✅ MutableIntList.makeString

✅ IntStream.of, boxed and mapToObj

There are nuances to each of these methods. The ArrayIterate approach wound up being the most concise for this particular use case.

Thank you for reading this blog and I hope you find the solutions I shared to this problem informative.

Enjoy!

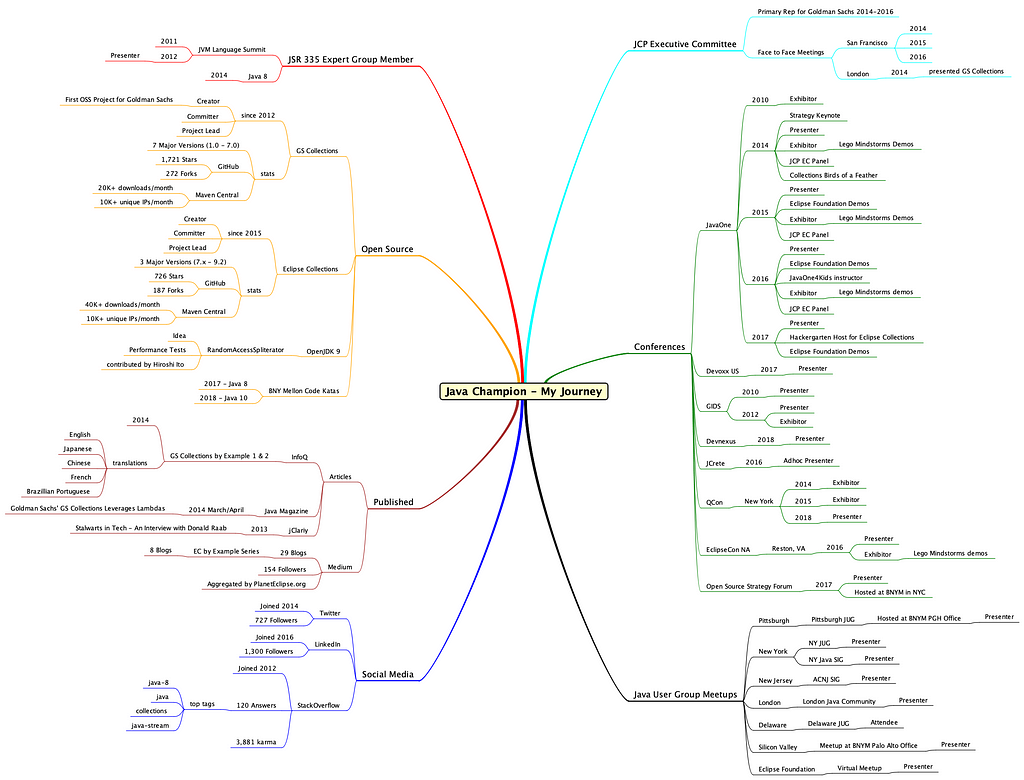

I am the creator of and committer for the Eclipse Collections OSS project, which is managed at the Eclipse Foundation. Eclipse Collections is open for contributions.

Nuanced String Joining in Java was originally published in Javarevisited on Medium, where people are continuing the conversation by highlighting and responding to this story.

April 22, 2024

Call for Papers: eSAAM 2024

by Shanda Giacomoni at April 22, 2024 01:05 PM

Submit your papers for Eclipse SAAM on Data Spaces, a conference that will bring together practitioners and researchers working on innovative software and systems solutions for next-generation mobility. This year the conference is co-located with Open Community Experience and will focus on Security and Privacy, Artificial Intelligence, Architecture, Modelling and related challenges.

April 19, 2024

Haiku Kata using String transform, Text Blocks, and Switch Expressions

by Donald Raab at April 19, 2024 03:44 PM

I solved the Haiku Kata again using more features added since Java 12

Finding String transform

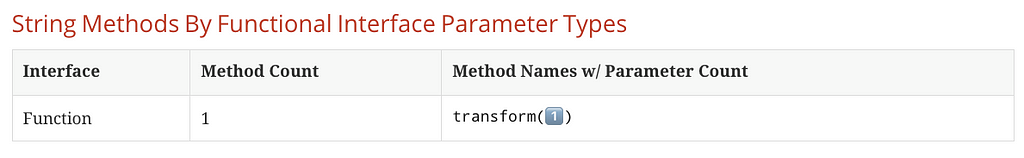

I found a method on the Java String class I hadn’t seen before. I discovered it while running the String class through my Java class kaleidoscope. There is a method that was added in Java 12 named transform.

The transform method makes it possible to pass a Function to a String and have any type returned. In Java, the more popular name for this method is map on Stream and Optional.

I couldn’t understand why this method was added to the String class. This is the only method on String that takes a Functional Interface like Function.

What is the purpose of this method on String?

Instead of scratching my head wondering, I decided to see what I could do with this method.

Haiku Kata

I created a kata for a set of eleven Haiku I wrote during a self-enforced writing detox period I went through in September 2021. I turned my haiku into a Java kata and blogged about it here. José Paumard turned my Haiku Kata into an amazing YouTube tutorial in JEP Café #9.

https://medium.com/media/d954992e8ffb899b6ebe8ff504c2facc/hrefJosé’s JEP Café #9 is currently one of the Top 10 most popular videos on the Java YouTube channel based on views. Congrats José!

Paul King also implemented the Haiku Kata in Groovy last year.

The Haiku Kata was intended to be a way for me to experiment with Java’s Text Block feature. Text Blocks were added as a standard feature in Java 15, and were in preview in Java 13 and 14.

In the Haiku Kata, I adapt a String stored as a Text Block into a CharAdapter class from Eclipse Collections. The code I write to do this looks like this.

CharAdapter chars = Strings.asChars("Hello!");The equivalent using the Java chars method looks like this.

IntStream chars = "Hello!".chars();

With the new transform method on the String class, it is possible to flip this code.

CharAdapter chars = "Hello!".transform(Strings::asChars);

There is something subtle that happens with this inversion of behavior, other than requiring a few more characters. To see the effect, it helps to have bigger String instances than “Hello!”. This is why I went back to the Haiku Kata to try the transform method.

Distinct Letters

One of the first tests to implement in the Haiku Kata is finding the distinct letters in the Tex Block. Using the String transform method, it is possible to inline all of the code to look as follows.

@Test

public void distinctLettersEclipseCollections()

{

String distinctLetters = """

Breaking Through Pavement Wakin' with Bacon Homeward Found

---------------- -------- ----------------- --------------

The wall disappears Beautiful pavement! Wakin' with Bacon House is where I am

As soon as you break through the Imperfect path before me On a Saturday morning Home is where I want to be

Intimidation Thank you for the ride Life’s little pleasures Both may be the same

Winter Slip and Slide Simple Nothings With Deepest Regrets

--------------------- --------------- --------------------

Run up the ladder A simple flower With deepest regrets

Swoosh down the slide in the snow Petals shine vibrant and pure That which you have yet to write

Winter slip and slide Stares into the void At death, won't be wrote

Caffeinated Coding Rituals Finding Solace Curious Cat Eleven

-------------------------- -------------- ----------- ------

I arrange my desk, Floating marshmallows I see something move This is how many

refactor some ugly code, Cocoa brewed hot underneath What it is, I am not sure Haiku I write before I

and drink my coffee. Comfort in a cup Should I pounce or not? Write a new tech blog.

"""

.transform(Strings::asChars)

.select(Character::isAlphabetic)

.collectChar(Character::toLowerCase)

.distinct()

.toString();

Assertions.assertEquals("breakingthoupvmwcdflsy", distinctLetters);

}

The transform method works better here than the original form which would require wrapping the entire text block in a method call to Strings.asChars().

This is what it would have looked like without the transform method.

@Test

public void distinctLettersEclipseCollectionsWithoutTransform()

{

String distinctLetters = Strings.asChars("""

Breaking Through Pavement Wakin' with Bacon Homeward Found

---------------- -------- ----------------- --------------

The wall disappears Beautiful pavement! Wakin' with Bacon House is where I am

As soon as you break through the Imperfect path before me On a Saturday morning Home is where I want to be

Intimidation Thank you for the ride Life’s little pleasures Both may be the same

Winter Slip and Slide Simple Nothings With Deepest Regrets

--------------------- --------------- --------------------

Run up the ladder A simple flower With deepest regrets

Swoosh down the slide in the snow Petals shine vibrant and pure That which you have yet to write

Winter slip and slide Stares into the void At death, won't be wrote

Caffeinated Coding Rituals Finding Solace Curious Cat Eleven

-------------------------- -------------- ----------- ------

I arrange my desk, Floating marshmallows I see something move This is how many

refactor some ugly code, Cocoa brewed hot underneath What it is, I am not sure Haiku I write before I

and drink my coffee. Comfort in a cup Should I pounce or not? Write a new tech blog.

""")

.select(Character::isAlphabetic)

.collectChar(Character::toLowerCase)

.distinct()

.toString();

Assertions.assertEquals("breakingthoupvmwcdflsy", distinctLetters);

}

It is harder to determine what type the select method is being applied to as you have to scan up to the top of the text block to see the String.asChars( opening.

Top Three Letters

The test to find the top three letters in the haiku can be rewritten using a single fluent line of code, by using the Switch Expressions feature of Java to make the test Assertions part of a call to forEachWithindex.

@Test

public void topLettersEclipseCollections()

{

"""

Breaking Through Pavement Wakin' with Bacon Homeward Found

---------------- -------- ----------------- --------------

The wall disappears Beautiful pavement! Wakin' with Bacon House is where I am

As soon as you break through the Imperfect path before me On a Saturday morning Home is where I want to be

Intimidation Thank you for the ride Life’s little pleasures Both may be the same

Winter Slip and Slide Simple Nothings With Deepest Regrets

--------------------- --------------- --------------------

Run up the ladder A simple flower With deepest regrets

Swoosh down the slide in the snow Petals shine vibrant and pure That which you have yet to write

Winter slip and slide Stares into the void At death, won't be wrote

Caffeinated Coding Rituals Finding Solace Curious Cat Eleven

-------------------------- -------------- ----------- ------

I arrange my desk, Floating marshmallows I see something move This is how many

refactor some ugly code, Cocoa brewed hot underneath What it is, I am not sure Haiku I write before I

and drink my coffee. Comfort in a cup Should I pounce or not? Write a new tech blog.

"""

.transform(Strings::asChars)

.select(Character::isAlphabetic)

.collectChar(Character::toLowerCase)

.toBag()

.topOccurrences(3)

.forEachWithIndex((each, index) -> Assertions.assertEquals(switch (index)

{

case 0 -> PrimitiveTuples.pair('e', 94);

case 1 -> PrimitiveTuples.pair('t', 65);

case 2 -> PrimitiveTuples.pair('i', 62);

default -> null;

}, index < 3 ? each : null));

}

The method forEachWithIndex is one of the lesser known methods available in Eclipse Collections. The equivalent Assertion code before required calling Assertions.assertEquals three separate times with an indexed lookup into the resulting ListIterable returned from topOccurrences.

Duplicates and Uniques

There wasn’t much to change in the duplicates and uniques code, other than inlining Text Block in the test and using the String transform method.

@Test

public void duplicatesAndUniqueEclipseCollections()

{

MutableCharBag chars = """

Breaking Through Pavement Wakin' with Bacon Homeward Found

---------------- -------- ----------------- --------------

The wall disappears Beautiful pavement! Wakin' with Bacon House is where I am

As soon as you break through the Imperfect path before me On a Saturday morning Home is where I want to be

Intimidation Thank you for the ride Life’s little pleasures Both may be the same

Winter Slip and Slide Simple Nothings With Deepest Regrets

--------------------- --------------- --------------------

Run up the ladder A simple flower With deepest regrets

Swoosh down the slide in the snow Petals shine vibrant and pure That which you have yet to write

Winter slip and slide Stares into the void At death, won't be wrote

Caffeinated Coding Rituals Finding Solace Curious Cat Eleven

-------------------------- -------------- ----------- ------

I arrange my desk, Floating marshmallows I see something move This is how many

refactor some ugly code, Cocoa brewed hot underneath What it is, I am not sure Haiku I write before I

and drink my coffee. Comfort in a cup Should I pounce or not? Write a new tech blog.

"""

.transform(Strings::asChars)

.select(Character::isAlphabetic)

.collectChar(Character::toLowerCase)

.toBag();

CharBag duplicates = chars.selectDuplicates();

CharSet unique = chars.selectUnique();

Assertions.assertEquals(chars, duplicates);

Assertions.assertEquals(CharSets.immutable.empty(), unique);

}

Final Thoughts

I can’t tell yet if the String transform method has interesting uses that I haven’t thought of yet, but I was happy to see how it worked with the the Haiku Kata. In the original version of the kata, I stored the text block into a field called haiku so as to keep the huge text block out of the methods, taking away from the method calls. The transform method created a nice separation and progression between the inlined String Text Block and the transformation code that found the distinct, top three, duplicate and unique characters.

Thanks for reading, and I hope you enjoyed learning about my recent discovery of the String transform method.

I am the creator of and committer for the Eclipse Collections OSS project, which is managed at the Eclipse Foundation. Eclipse Collections is open for contributions.

Haiku Kata using String transform, Text Blocks, and Switch Expressions was originally published in Javarevisited on Medium, where people are continuing the conversation by highlighting and responding to this story.

April 18, 2024

The Eclipse Foundation Unveils Open Community Experience (OCX), Europe’s Premier Event for Open Source Innovation

by Jacob Harris at April 18, 2024 11:00 AM

BRUSSELS – 18 April 2024 – The Eclipse Foundation, one of the world’s largest open source foundations, is proud to announce the launch of Open Community Experience (OCX), a transformative open source developer conference set to take place 22-24 October 2024 in Mainz, Germany. This inaugural event will be a catalyst for open collaboration and innovation, covering a diverse range of community-curated open source topics, including automotive & mobility, embedded IoT & edge, cloud native Java, languages and runtimes, supply chain security, dataspaces, and open source software development best practices.

“As our communities have continued to grow and thrive, it became clear that we needed to create a new event that matched the momentum behind our growing ecosystem, as well as the diversity of its interests and collaborations,” said Mike Milinkovich, executive director of the Eclipse Foundation. “OCX has been built to serve as the premier event in Europe for developers and technology leaders worldwide engaged in open source ecosystems to meet, share ideas and experiences, and learn from each other.”

Set in the historic city of Mainz, Germany, near Frankfurt, OCX will offer an array of tracks covering Eclipse projects and a wide range of topics relevant to open source developers and practitioners. In addition to the main conference tracks, OCX will also host three multi-day, collocated events, each dedicated to specific communities: Open Community for Automotive, Open Community for Java, and EclipseCon.

The planned lineup includes:

- OCX Main Tracks

- Embedded, IoT & Edge

- Languages & Runtimes

- OSS Supply Chain Security

- Open Collaboration Best Practices

- Open Community for Automotive: dedicated to automotive software, software defined vehicles, and mobility.

- Open Community for Java: focusing on topics related to Jakarta EE, Adoptium, MicroProfile, open source enterprise Java, and more.

- EclipseCon: the centre of gravity for all topics related to the Eclipse IDE platform and next-generation cloud-based development tools.

In addition to the collocated three-day events, the program will feature specialised sessions and one day gatherings like the Eclipse Security, Artificial Intelligence, Architecture, and Modelling Conference (eSAAM) on 22 October 2024, which will spotlight innovative software and solutions for data spaces.

OCX offers the ideal opportunity to engage with developers, executives, and evangelists shaping today’s most advanced open technologies. Whether you aim to showcase your own solutions, expand your organisation’s role in the developer ecosystem, or connect with potential partners for new opportunities, OCX is Europe's premier venue for developer innovation. We are now actively seeking contributions from sponsors, members, potential speakers, and more. Join us in shaping the future of open source collaboration at OCX!

OCX is made possible with the generous support of our sponsors, and we extend our sincere gratitude to each of them. A special acknowledgement goes to Huawei, our first Diamond-level sponsor, whose support sets a high standard for excellence. We also appreciate the early sponsorship commitment from EclipseSource, Lunatech, Obeo, Scanoss, and Typefox. Your contributions make OCX a reality and greatly enhance the experience for all attendees

We encourage all companies in the Eclipse ecosystem, particularly our Strategic members, to consider sponsoring our flagship conference. This is a unique opportunity to build your brand and directly engage a highly qualified developer audience focused explicitly on the open source technologies that drive your business. Sponsor packages include multiple event passes, making it easy for your developers to join the many conversations to be had at this unique confluence of forward-thinking technologists. Explore sponsorship opportunities by reaching out to sponsors@OCXconf.org.

For more details on OCX and how you can sponsor and participate, visit the OCX website. If you would like to submit a talk for consideration, the Call for Proposals is now open, with an early bird deadline of 31 May 2024.

About the Eclipse Foundation

The Eclipse Foundation provides our global community of individuals and organisations with a business-friendly environment for open source software collaboration and innovation. We host the Eclipse IDE, Adoptium, Software Defined Vehicle, Jakarta EE, and over 415 open source projects, including runtimes, tools, specifications, and frameworks for cloud and edge applications, IoT, AI, automotive, systems engineering, open processor designs, and many others. Headquartered in Brussels, Belgium, the Eclipse Foundation is an international non-profit association supported by over 360 members. Visit us at this year’s Open Community Experience (OCX) conference on 22-24 October 2024 in Mainz, Germany. To learn more, follow us on social media @EclipseFdn, LinkedIn, or visit eclipse.org.

Third-party trademarks mentioned are the property of their respective owners.

###

Media contacts:

Schwartz Public Relations for the Eclipse Foundation, AISBL (Germany)

Gloria Huppert/Julia Rauch

Sendlinger Straße 42A

80331 Munich

EclipseFoundation@schwartzpr.de

+49 (89) 211 871 -70/-43

Nichols Communications for the Eclipse Foundation, AISBL

Jay Nichols

+1 408-772-1551

514 Media Ltd for the Eclipse Foundation, AISBL (France, Italy, Spain)

Benoit Simoneau

M: +44 (0) 7891 920 370

April 17, 2024

OCX Call for Proposals Now Open!

by Shanda Giacomoni at April 17, 2024 04:03 PM

Do you have insights, experiences, or innovations to share? We're currently accepting submissions for our new Open Community Experience (OCX) conference and it's collocated events. Submit your proposal now and be part of shaping the OCX 2024 agenda.

April 12, 2024

Enhancing Modeling Tools with AI: A Leap Towards Smarter Diagrams with Eclipse GLSP

by Jonas, Maximilian & Philip at April 12, 2024 12:00 AM

The integration of Artificial Intelligence (AI) with IDEs, as exemplified by tools like Github Copilot, Codeium, Tabine, ChatGPT and more, has opened new horizons in software development. It is …

The post Enhancing Modeling Tools with AI: A Leap Towards Smarter Diagrams with Eclipse GLSP appeared first on EclipseSource.

April 11, 2024

OCX 2024: Celebrating Community, Code and Collaboration

by Clark Roundy at April 11, 2024 06:25 PM

TL;DR - Don't miss the opportunity to participate in Open Community Experience 2024, a new conference for our vibrant community of communities.

At the Eclipse Foundation, our ethos is anchored in three pivotal Cs: Community, Code, and Collaboration. These principles are so integral to our mission that when we re-envisioned our flagship event for 2024, we aimed to include these themes. As a code-first community, we initially introduced our revamped event as the Open Code Experience (OCX). However, after careful reflection and, in full disclosure, due to some potential concerns related to trademark considerations, we embraced a name that truly resonates with our core mission: the Open Community Experience.

Communities are at the core of the Eclipse Foundation. OCX 2024 will showcase a diverse range of excellent content across multiple tracks from the Eclipse ecosystem. In addition to the main conference tracks, OCX will also host three multi-day, collocated events, each dedicated to specific communities: Open Community for Automotive, Open Community for Java, and EclipseCon.

Our program committees are currently hard at work building an outstanding program, but here's a glimpse of what to expect:

- Main OCX Tracks: Content covering embedded systems, IoT and edge computing, programming languages and runtimes, security, trusted data sharing, and open source best practices.

- Open Community for Automotive: Focused on innovations in automotive software, software-defined vehicles, and mobility domains.

- Open Community for Java: A deep dive into the open source enterprise Java ecosystem, featuring content related to Jakarta EE, Adoptium, MicroProfile, and much more.

- EclipseCon: Reinvented as the nexus for all talks related to the Eclipse IDE platform and the evolving landscape of development tools.

In addition to the collocated multi-day events, the program will feature specialised sessions and one day gatherings like the Eclipse Security, Artificial Intelligence, Architecture, and Modelling Conference (eSAAM), which will spotlight innovative software and solutions for data spaces.

OCX is made possible with the generous support of our sponsors, and we extend our sincere gratitude to each of them. A special acknowledgement goes to Huawei, our first Diamond-level sponsor, whose support sets a high standard for excellence. We also appreciate the early sponsorship commitments from EclipseSource, Lunatech, Obeo, Scanoss, and Typefox. Your contributions make OCX a reality and greatly enhance the experience for all attendees.

We encourage all companies in the Eclipse ecosystem, particularly our Strategic members, to consider sponsoring our flagship conference. This is a unique opportunity to build your brand and directly engage a highly qualified developer audience focused explicitly on the open source technologies that drive your business. Sponsor packages include multiple event passes, making it easy for your developers to join the many conversations to be had at this unique confluence of forward-thinking technologists. Explore sponsorship opportunities by reaching out to sponsors@OCXconf.org.

OCX isn't just an event — it's a celebration of community-driven collaboration and open source innovation. We invite you to join us on this exciting journey. For more details on OCX and how you can sponsor and participate, visit the OCX website. And, be sure to mark your calendars for the Call for Proposals opening on April 15.

Stay tuned for more exciting news and announcements in the coming weeks!

April 09, 2024

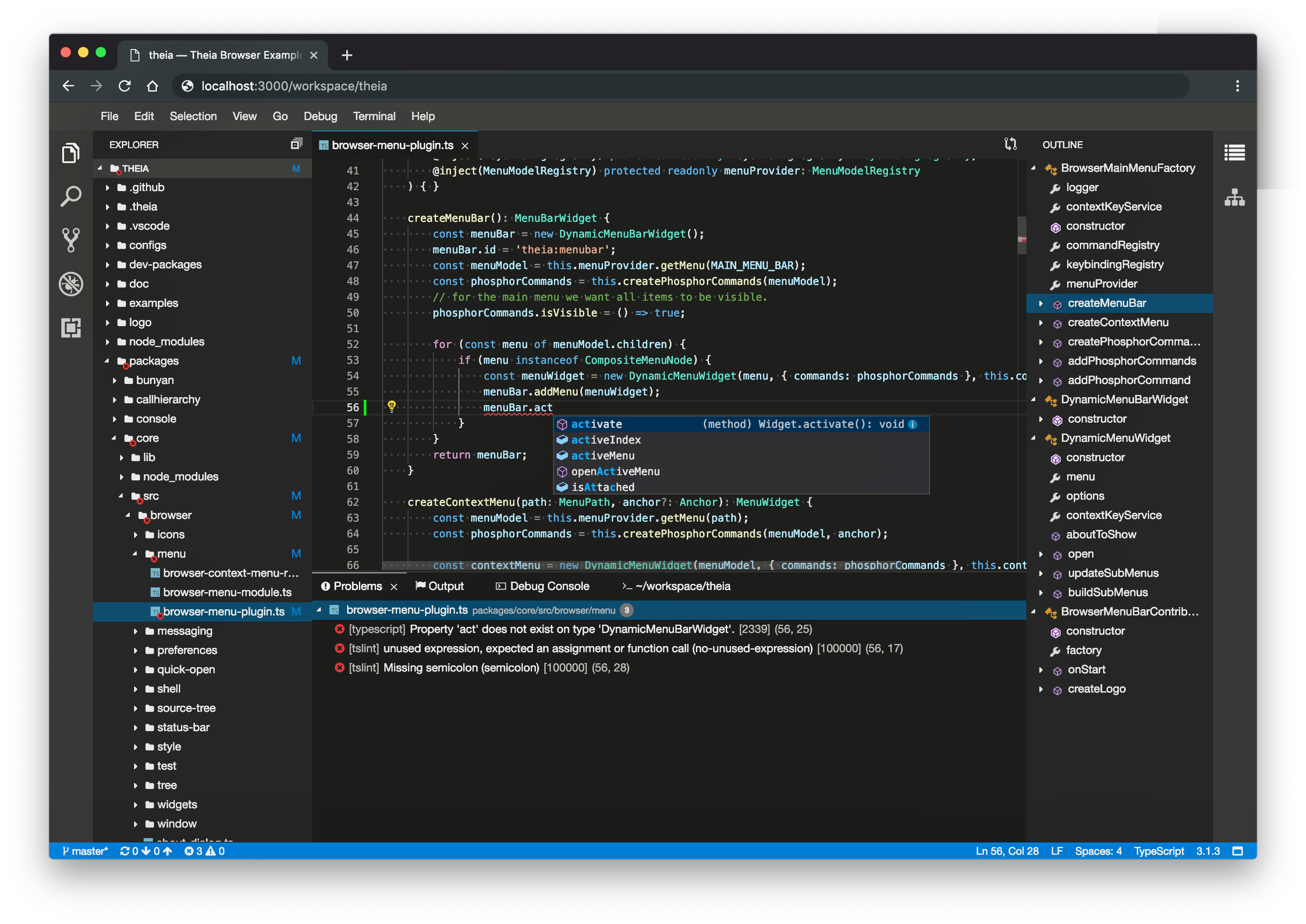

Eclipse Theia 1.48 Release: News and Noteworthy

by Jonas, Maximilian & Philip at April 09, 2024 12:00 AM

We are happy to announce the Eclipse Theia 1.48 release! The release contains 53 merged pull requests and we welcome two new contributors. In this article we will highlight some selected improvements …

The post Eclipse Theia 1.48 Release: News and Noteworthy appeared first on EclipseSource.

April 06, 2024

Looking at a Java Class and its Methods Through a Kaleidoscope

by Donald Raab at April 06, 2024 06:44 PM

Exploring the Java Stream methods from multiple perspectives

Six Views of a Java Class

I have been exploring different ways of understanding the methods of large Java classes. I don’t mean large in terms of lines of code, but large in terms of number of methods. Feature-rich interfaces, sometimes referred to as humane interfaces, are not incredibly humane when you need to find a method, if you don’t know the name, or discover patterns and look for symmetry between types. Thankfully, we have computers to help us process large amounts of data very quickly. Class and Method declarations are an easily accessible treasure trove of information about libraries and applications that Java developers have at their fingertips. Unfortunately, beyond good old Javadoc we don’t have many ways to make them talk.

I’ve created some basic documentation tools for increasing my own understanding of the feature-rich API of Eclipse Collections. Of course, I use Eclipse Collections to help me understand Eclipse Collections. The tools I am creating allow me to understand any Java Class and its Method instances, not just Eclipse Collections classes. One might consider the tools I have been experimenting with as a kind of Javadoc++. I am writing this blog in the hopes it might confirm or deny the usefulness of some of these class/method views.

I’m also hoping this blog will bring more attention and interest to a user-defined method grouping feature that is missing in Java, that I learned from Smalltalk, and is known as Method Categories. Imagine being able to optionally tag each Method in a Java Class with something like methodCategory=”filtering” or methodCategory=”transforming” and then having JavaDoc, IDEs, and developers able to query this information through the Method interface. I digress. Back to the views and groupings that I was able to create without any new Java features.

I have created five AsciiDoc generated views of a class and its methods to help me understand how the methods on the class are organized and if any interesting patterns exist. These views can help me understand class scope, naming patterns and any symmetry/asymmetry that might exist.

The six views of a Java class we will see in this blog are as follows:

- Javadoc

- Methods by First Letter

- Methods by Prefix

- Methods by Suffix

- Methods by Return Type

- Methods by Functional Interface Parameter Types

For views two through six, I used Eclipse Collections to group and count methods by different attributes based on the Class and Method types in Java.

There is no user-defined grouping mechanism for Java methods today, which would be the equivalent of method categories in Smalltalk. I used the available metadata about a Method to build groupings of methods to create these additional views of methods using generated AsciiDoc. I hosted the generated AsciiDoc for the Java Stream class in gists. I linked to the gists below and used GitHub’s ability to render AsciiDoc to have decent looking tables displayed inline.

Enjoy the following views of the Java Stream class and its methods!

Six Views of Java Stream

We will explore the six views of a Java class using Java Stream as an example.

1. Javadoc

The first view of Stream we can find online is the Javadoc for the class.

The Javadoc view shows us all the documentation about the class, and organizes a method summary with all methods listed in alphabetical order. While this may be suitable for some purposes, it does not help us find patterns in the methods, because there is only a flat view without any particular grouping. We can filter methods by static methods, instance methods, abstract methods, and default methods.

We can use the useful search widget in the top right corner to find things. There is also an interesting “Use” tab that can show us the places in the JDK where Java Stream is used.

Uses of Interface java.util.stream.Stream (Java SE 21 & JDK 21)

2. Stream Methods by First Letter

This is the simplest view of methods on a class. I use Eclipse Collections to group all of the methods of the Stream class by their first letter, and sort them by the most frequent first letter to the least frequent.

In each letter, the methods are sorted alphabetically. The benefit of this view is the method compression. In the Javadoc view for Stream, you have to scroll to see all of the methods. With this view, there is no scrolling on either my 27 inch monitor or laptop screen.

https://medium.com/media/49b7a53df8af7eb8c79e3bd6df9420da/href3. Stream Methods by Prefix

This view is slightly more interesting than grouping by first letter. Here we group the methods by any prefix they might have like “map” or “for”. The prefix is determined by the split between the initial lowercase letters in the method and the first uppercase letter. Methods with no prefix, or having all lowercase letters, show up in a category of “No Prefix”. This category shows up first because the table is sorted by the most frequent prefix to the least frequent prefix. Prefix does grouping/screen space compression and also serves as a rough approximation of categories for a majority of methods.

https://medium.com/media/7d4b7898ae0bfafd1acff19efa0199ec/href4. Stream Methods by Suffix

This view is an interesting variation on the grouping by prefix. Here we group the methods by any suffix they might have like “int” or “match”. The suffix is determined by the split between the last uppercase letter and the remaining lowercase letters in the method. Methods with no suffix, or having all lowercase letters, show up in a category of “No Suffix”. This category shows up first because the table is sorted by the most frequent suffix to the least frequent suffix.

https://medium.com/media/47cbdbd4061946f918b7585c32da8c80/href5. Stream Methods by Return Type

In this view, which has methods grouped by their return types, we get to see a bit more information about each method. We can see the methods with their parameter types. This necessarily takes up a bit more screen real estate, but the extra information is potentially useful. The table is sorted by most frequent return type to least frequent return type. As we can see, Stream is the most frequent return type from Stream, which means there are a decent number of lazy methods on the Stream interface. There are also four BaseStream methods and nine primitive stream (IntStream, LongStream, DoubleStream) methods which are also lazy.

https://medium.com/media/61700f8d2181d268db2a848bc067e5b1/href6. Stream Methods by Functional Interface Parameter Types

This was the most complicated of the views to produce. In this view, we can see the methods, with their number of parameters as an emoji, grouped by each of the parameter types the methods take. The methods are filtered to only include parameter types that are Functional Interfaces (e.g. Predicate, Function, Consumer). This view tells us which methods are lambda ready, and which Functional Interface has the most methods with it as a parameter type. The parameter number was included here, because as we can see on the BinaryOperator row in the table, there are three overloaded versions of reduce.

This view can quickly answer questions like “Where can I use a Predicate with a Stream?” or “Where can I use a Function with a Stream?”

https://medium.com/media/1b5873c26da2b9dc28168c48a44f861c/hrefComparing Stream and RichIterable Lambda Enabled Methods

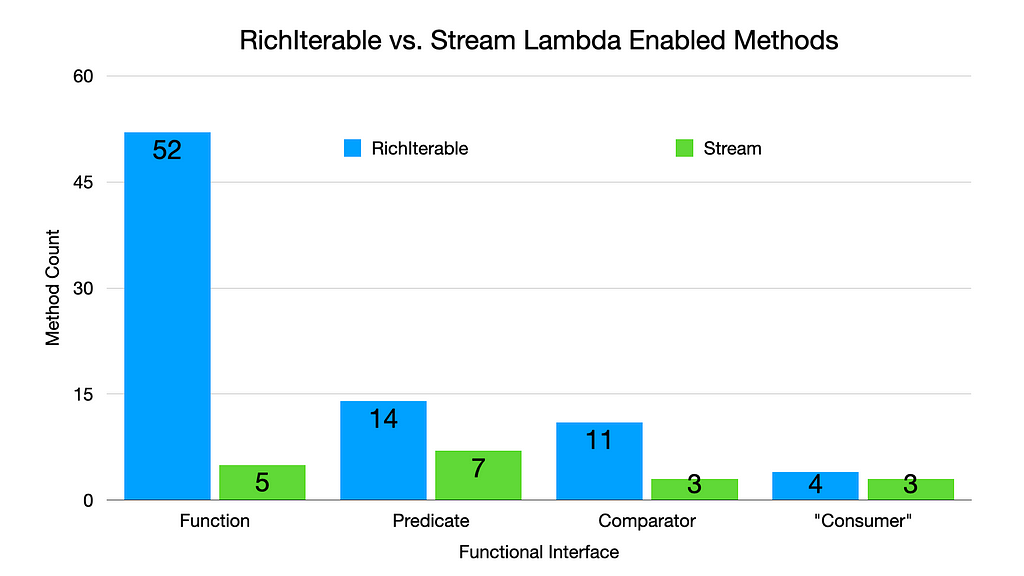

The sixth view of the Stream interface methods allowed me to compare how lambda ready Stream is compared to the RichIterable interface from Eclipse Collections, based on the number of methods that accept the four most well known Functional Interfaces as parameters.

Consumer is quoted in the above chart as the equivalent type in Eclipse Collections is named Procedure.

If you feel like there are some useful methods missing in Stream, you might be able to find them in RichIterable. The detailed view of RichIterable Methods by Functional Interface Parameter Types can be seen below.

https://medium.com/media/2d7fa8c9d57e26186324a3f382e60fd8/hrefFinal Thoughts

Sometimes it’s helpful to build tools to help us augment our understanding of the Java classes and methods we use. Java gives us the capability to query Classes and Methods for a lot of useful information in code. Looking for everything in files can necessitate a lot of scrolling and testing of our memory. Information chunking is extremely helpful for humans. Grouping and Filtering are great options for aiding information chunking.

This is the first time I have used AsciiDoc in a gist included in a Medium blog. I was pleasantly surprised by the automatic rendering of the AsciiDoc tables by GitHub. Now that I know this is possible, I may use AsciiDoc tables in gists instead of screen captures for tables in the future.

I hope you found this blog and the five AsciiDoc generated Class/Method views of the Stream class in Java useful. Please share this blog with others who you think may benefit from learning more about the method naming and organization on the Java Stream class!

Enjoy!

I am the creator of and committer for the Eclipse Collections OSS project, which is managed at the Eclipse Foundation. Eclipse Collections is open for contributions.

Looking at a Java Class and its Methods Through a Kaleidoscope was originally published in Javarevisited on Medium, where people are continuing the conversation by highlighting and responding to this story.

April 04, 2024

The Eclipse Foundation to Highlight Open Innovation at embedded world 2024

by Jacob Harris at April 04, 2024 11:00 AM

BRUSSELS – 4 April 2024 – The Eclipse Foundation, one of the world’s largest open source foundations, is set to unveil its latest open source innovations at this year’s embedded world Exhibition & Conference, taking place in Nuremberg from 9-11 April 2024. The foundation will be presenting talks on multiple embedded topics, while also highlighting new open source technologies, including a safety-certified real-time operating system (RTOS), industrial grade RISC-V CPU IP, and Software-Defined Vehicle (SDV) innovations.

“Embedded computing, the bedrock of today’s digital economy, thrives on open source technologies,” said Mike Milinkovich, executive director of the Eclipse Foundation. “The Eclipse Foundation is experiencing remarkable growth across various aspects of embedded innovation, spanning IoT, edge computing, and our Software Defined Vehicle initiatives. We’re particularly excited to showcase the first release of Eclipse ThreadX at this year’s Embedded World.”

The First Release of Eclipse ThreadX

Eclipse ThreadX, formerly Azure RTOS, is the world's first open source, safety-certified real-time operating system (RTOS). ThreadX is a new addition to the world of open source, having recently become a project at the Eclipse Foundation after its transition from Microsoft. embedded world 2024 serves as the launchpad for the debut release of ThreadX under the stewardship of the Eclipse Foundation. Eclipse ThreadX v6.4.1 is available under the permissive MIT licence. You can download it and its subcomponents from GitHub.

Innovation for Software-Defined Vehicles

The Eclipse SDV team will showcase groundbreaking business use cases with SDV Blueprints, spotlighting advancements in insurance, fleet management, and software orchestration. These initiatives represent a collaborative effort across more than 20 automotive open source projects, highlighting a collective effort to push the boundaries of software-defined vehicles.

Industrial-Grade, Open Source RISC-V CPU Technology

The OpenHW Group will be demonstrating its latest cores based on RISC-V open source technology. The CVA6, CVE4, and CVE2 cores exemplify the pinnacle of open source innovation and adoption in the embedded industry.

Cutting-Edge Research and New Open Source Projects

The Eclipse Research team will unveil four new projects that are advancing the future of technology:

- CODECO, a cognitive, cross-layer, and highly adaptive Edge-Cloud management framework

- TRISTAN, an industrialisation of the European RISC-V ecosystem that is capable of competing with existing commercial alternatives

- TRANSACT, a universal, distributed solution architecture for the transformation of security-critical cyber-physical systems

- NEPHELE, a reliable and secure end-to-end orchestration of hyper-distributed applications on a programmable infrastructure.

Advancing European Technology Leadership with Eclipse Aidge

With the backing of CEA List, the Eclipse Aidge project stands out as a testament to European and French technological leadership. It embodies the successful transition from academic research to real-world applications, showcasing the symbiotic relationship between academia and embedded industry. Connect with the CEA List team to learn more about Eclipse Aidge.

Get to Know Our Community

Discover the diverse Eclipse Foundation ecosystem catering to the IoT, edge, and embedded industries. Representatives from key Eclipse communities such as Eclipse IoT, Sparkplug, and Oniro will all be available to engage with you.

Must-Attend Technical Talks

Eclipse Foundation experts will have a strong presence at this year’s embedded world, including two specific presentations that anyone interested in open source technologies will not want to miss:

- Frédéric Desbiens: Open Source Software and Lifecycle Standards – Yes: It Can Be Done (Tuesday, April 9, 11:30 AM - 12:00 PM, NCC Ost, Session 6.1)

- Frédéric Desbiens: The State of Open Source Real-Time Operating Systems (Wednesday, April 10, 11:00 AM - 11:30 AM, NCC Ost, Session 3.4)

Join Us at Booth 4-554, Hall 4:

Discover the transformative power of open source innovation by visiting the Eclipse Foundation at booth 4-554, Hall 4. Engage with our vibrant communities, explore industry-leading projects, and discover how open source initiatives are reshaping technology. We look forward to seeing you there!

About the Eclipse Foundation

The Eclipse Foundation provides our global community of individuals and organisations with a business-friendly environment for open source software collaboration and innovation. We host the Eclipse IDE, Adoptium, Software Defined Vehicle, Jakarta EE, and over 415 open source projects, including runtimes, tools, specifications, and frameworks for cloud and edge applications, IoT, AI, automotive, systems engineering, open processor designs, and many others. Headquartered in Brussels, Belgium, the Eclipse Foundation is an international non-profit association supported by over 360 members. Visit us at this year’s Open Code Experience (OCX) conference on 22-24 October 2024 in Mainz, Germany. To learn more, follow us on social media @EclipseFdn, LinkedIn, or visit eclipse.org.

Third-party trademarks mentioned are the property of their respective owners.

###

Media contacts:

Schwartz Public Relations for the Eclipse Foundation, AISBL (Germany)

Gloria Huppert/Julia Rauch

Sendlinger Straße 42A

80331 Munich

EclipseFoundation@schwartzpr.de

+49 (89) 211 871 -70/-43

Nichols Communications for the Eclipse Foundation, AISBL

Jay Nichols

+1 408-772-1551

514 Media Ltd for the Eclipse Foundation, AISBL (France, Italy, Spain)

Benoit Simoneau

M: +44 (0) 7891 920 370

Eclipse Foundation Security Advisory: HTTP CONTINUATION frames issue

by Marta Rybczynska at April 04, 2024 04:24 AM

The Eclipse Foundation Security Team has been made aware of the vulnerability VU#421644 affecting multiple HTTP/2 implementations, that could cause an out-of-memory crash.

April 03, 2024

Showcasing Open Innovation at embedded world 2024: An Eclipse Foundation Preview

by Clark Roundy at April 03, 2024 11:33 PM

With embedded world 2024 just around the corner, we're thrilled to offer a sneak peek into the groundbreaking projects and innovations that are shaping the future, all thanks to the collaborative efforts of the Eclipse Foundation and our vibrant community.

Join us in Nuremberg April 9th to 11th at booth 4-554, Hall 4, as we unveil a diverse spectrum of open source projects and technologies spanning the entire embedded ecosystem. At the Eclipse Foundation booth, you'll have the opportunity to explore the world of open source collaboration and witness firsthand its transformative power.

Here are a few things to expect and explore when you visit our booth:

Software Defined Vehicle Innovation

The Eclipse SDV team will showcase groundbreaking business use cases with SDV Blueprints, spotlighting advancements in insurance, fleet management, and software orchestration. These initiatives represent a collaborative effort across more than 20 automotive open source projects, highlighting a collective effort to push the boundaries of software-defined vehicles.

The World’s First Open Source, Safety-Certified RTOS

Eclipse ThreadX, formerly Azure RTOS, is the world's first open source, safety-certified embedded real-time operating system. ThreadX is a new addition to the world of open source, having recently become a project at the Eclipse Foundation after its transition from Microsoft. Embedded world 2024 serves as the launchpad for the debut release of ThreadX under the stewardship of the Eclipse Foundation. Eclipse ThreadX v6.4.1 is now available under the permissive MIT licence. You can download it and its subcomponents from GitHub. Stop by our booth to engage with one of our experts and learn more about how you can harness the potential of open source in your next safety-critical real-time project.

Industrial-Grade, Open Source RISC-V CPU Technology

With a mission to deliver industrial-grade, fully open source RISC-V CPU IP Cores to the tech community, the OpenHW Group's CVA6, CVE4, and CVE2 cores exemplify the pinnacle of open source innovation and adoption in the industry. Chat with the OpenHW team to learn more about their projects.

Advancing European Technology Leadership with Eclipse Aidge

With the backing of CEA List, the Eclipse Aidge project stands out as a testament to European and French technological leadership. It embodies the successful transition from academic research to real-world applications, showcasing the symbiotic relationship between academia and embedded industry. Connect with the CEA List team to learn more about Eclipse Aidge.

Cutting-Edge Research and New Open Source Projects

The Eclipse Research team will present 4 new projects that are advancing the future of technology: CODECO, a cognitive, cross-layer and highly adaptive Edge-Cloud management framework; TRISTAN, an industrialisation of the European RISC-V ecosystem that is capable of competing with existing commercial alternatives; TRANSACT, a universal, distributed solution architecture for the transformation of security-critical cyber-physical systems; and NEPHELE, a reliable and secure end-to-end orchestration of hyper-distributed applications on a programmable infrastructure.

Get to Know Our Community

Discover the diverse Eclipse Foundation ecosystem catering to the IoT, edge and embedded industries. Representatives from key Eclipse communities such as Eclipse IoT, Sparkplug, and Oniro will all be available to engage with you.

Must-Attend Technical Talks

Embedded world features talks covering myriad topics, but here are some sessions you definitely won’t want to miss:

- Frédéric Desbiens: Open Source Software and Lifecycle Standards – Yes: It Can Be Done (Tuesday, April 9, 11:30 AM - 12:00 PM, NCC Ost, Session 6.1)

- Frédéric Desbiens: The State of Open Source Real-Time Operating Systems (Wednesday, April 10, 11:00 AM - 11:30 AM, NCC Ost, Session 3.4)

Join Us at embedded world 2024

Embedded world 2024 offers a glimpse into the future of embedded systems. Visit the Eclipse Foundation at booth 4-554, Hall 4 To connect with our passionate communities, explore industry-leading projects and discover how open source is reshaping technology. We look forward to seeing you there!

Date: 9 - 11 April 2024

Location: Nuremberg, Germany

Visit Us: Booth 4-554, Hall 4

Eclipse Theia IDE: A Look at Leaps in Performance

by John Kellerman at April 03, 2024 02:53 PM

Developers hate waiting. In the ever-evolving landscape of Integrated Development Environments (IDEs), the quest for swifter, more efficient tools remains a constant pursuit. Enter Eclipse Theia IDE. This is more than a rebrand of Theia Blueprint. It is a statement that this IDE is ready for prime time and to provide a great user experience. It is a statement that the feature set, stability and performance have all improved to the point where the product provides a compelling user experience. We will explore a bit of the performance improvements with Theia IDE startup time and compare it historically. This should lead to a more streamlined development journey.

Objectively speaking, Eclipse Theia IDE delivers a noticeable boost in performance over the “Theia Blueprints” releases 3 months ago,  particularly evident during the startup phase. To get to this point, smart engineering was used. The Theia developers started by measuring execution time in a repeatable way. This phase is called instrumentation. After that, they observed the results and improved the performance dominators. This showed them where to look and where to optimize. After that, they identify an issue, fix it and repeat the process to continually make things faster.

particularly evident during the startup phase. To get to this point, smart engineering was used. The Theia developers started by measuring execution time in a repeatable way. This phase is called instrumentation. After that, they observed the results and improved the performance dominators. This showed them where to look and where to optimize. After that, they identify an issue, fix it and repeat the process to continually make things faster.

The Theia developers set up a site to observe performance changes. The tests were run on github runners, which are quite fast, but more importantly, are quite stable in practical terms. Two runs on the infrastructure yielded reproducible changes.

The following chart shows the total “Startup time.” It does not show a complete picture though as it does not show when users can begin to interact with the UI. You can see there were significant speedups in November, where the performance has more than doubled. The time from initialization to a responsive UI has improved by about the same factor too! There were a few observed speedups on Jan 21st. This is due to the “implement headless plug-ins” patch which defers plug-in loading. These were done by Tobias Ortmayr, Philip Langer, Jonas Helming, Stefan Dirix, Thomas Maeder and more.

The Theia developers used Eclipse Trace Compass to compare the execution times. If you’d like, you can reproduce the findings yourself. In the theia-e2e-test-suite GitHub repo, run the get_trace.py script to reproduce the experiment.

The view above is the flame chart. It shows the call stack over time. Trace Compass has the ability to synchronize two runs and compare them. With this data layout, it becomes clear that startplugins was a major dominator before, and was reduced. However, every item on the UI thread (front end) seems to have accelerated aggressively. This is great news as the UI thread is resource limited, it could require a UI thread whereas the back-end can eventually multi-threaded more easily.

We must however acknowledge the unknown. The response to first click is measured here with 20% unknown (see above the pink boxes). The unknown is probably due to the Theia plug-in being downloaded to the browser. However, it needs to be confirmed and a trace point on that transaction would need to be added

If the full transaction, i.e. the full initialization and not just interactivity, is considered, almost 80% of the time is unknown. With the data we have, it is not clear what is happening. One possibility is this is when plug-ins are lazy-loaded. It is bounded by the backend operation, and so this could be seen as a "known unknown." While this may initially seem daunting, it presents an opportunity for further exploration and refinement. For example, by instrumenting the plug-in API, the initialization time of plug-ins would be known and a misbehaving plug-in on the critical path of start-up could be identified.

Having a proper, more complete test bench and suite is an opportunity for more visibility in the long run. The partially instrumented code is a good first step, meaning that we need to instrument more, to fill in the gaps. When the code instrumentation is complete for a given test execution flow, more test flows can be then added. This then presents additional opportunities to update the instrumentation.

Will Rogers is attributed to saying “You never get a second chance to make a first impression.” The startup time is the first thing anyone sees when opening a new product, and the Theia community has optimized it, showing their concern with the user experience. This shows the general attitude and the work towards justifying the rebrand from Blueprint to IDE. Its strides in startup performance are the first step on a journey of ongoing enhancement and refinement. As the team continues down this path, the community is optimistic and anticipating what lies ahead. (spoiler alerts: backendless, remote and multi-player!)

With Eclipse Theia IDE arriving on the scene, developers have another viable contender that offers rich features, a vibrant community and a tight experience.

Matthew Khouzam,

Director, Eclipse Foundation

Product Owner/Developer, Ericsson AB

John Kellerman

Program Manager Cloud DevTools and Open VSX

Eclipse Foundation

April 02, 2024

The Open Source Community is Building Cybersecurity Processes for CRA Compliance

by Jacob Harris at April 02, 2024 02:40 PM

Apache Software Foundation, Blender Foundation, OpenSSL Software Foundation, PHP Foundation, Python Software Foundation, Rust Foundation, and Eclipse Foundation are jointly announcing our intention to collaborate on the establishment of common specifications for secure software development based on existing open source best practices.

The Open Source Community is Building Cybersecurity Processes for CRA Compliance

by Mike Milinkovich at April 02, 2024 07:00 AM

tl;dr – Apache Software Foundation, Blender Foundation, OpenSSL Software Foundation, PHP Foundation, Python Software Foundation, Rust Foundation, and Eclipse Foundation are jointly announcing our intention to collaborate on the establishment of common specifications for secure software development based on existing open source best practices.

In an effort to meet the real challenges of cybersecurity in the open source ecosystem, and to demonstrate full cooperation with, and to support the implementation of, the European Union’s Cyber Resilience Act (CRA), Apache Software Foundation, Blender Foundation, OpenSSL Software Foundation, PHP Foundation, Python Software Foundation, Rust Foundation, and Eclipse Foundation are announcing an initiative to establish common specifications for secure software development based on open source best practices.

This collaborative effort will be hosted at the Brussels-based Eclipse Foundation AISBL under the auspices of the Eclipse Foundation Specification Process and a new working group. As Europe’s largest open source foundation, which also supports a robust open specification process, the Eclipse Foundation is a natural home for this effort. Other code-hosting open source foundations, SMEs, industry players, and researchers are invited to join in as well. The starting point for this highly technical standardisation effort will be today’s existing security policies and procedures of the respective open source foundations, and similar documents describing best practices. The governance of the working group will follow the Eclipse Foundation’s usual member-led model but will be augmented by explicit representation from the open source community to ensure diversity and balance in decision-making. The deliverables will consist of one or more process specifications made available under a liberal specification copyright licence and a royalty-free patent licence.

The reasons for this collaboration extend beyond compliance. In an era where software, particularly open source software, plays an increasingly vital role in modern society, the need for reliability, safety, and security has steadily increased. New regulations, exemplified by the impending CRA, underscore the urgency for secure by design and robust supply chain security standards well before the new regulation comes into force in 2027.

While open source communities and foundations generally adhere to and have historically established industry best practices around security, their approaches often lack alignment and comprehensive documentation. The open source community and the broader software industry now share a common challenge: legislation has introduced an urgent need for cybersecurity process standards.

The CRA will lead to numerous standards requests from the Commission to the European Standards Organisations. And these are only the European requirements – additional demands from the US and other regions can be anticipated.

The CRA also creates a new type of economic actor – the “Open Source Software Steward”. It is in this context that we, as open source foundations, want to respond to the challenge of establishing common specifications for secure software development.

This challenge is compounded by the following:

- Today’s global software infrastructure is over 80% open source. The software stack that underpins any product with digital elements is typically built using open source software. As a result, it is fair to say that when we discuss the “software supply chain,” we are primarily, but not exclusively, referring to open source.

- Traditional standards organisations have had limited interactions with open source communities and the broader software/IT industry. To make matters more complicated, their governance models currently do not provide opportunities for open source communities to engage.

- Open source communities have a limited history of dealing with traditional standards organisations. To make matters more complicated, their resource constraints make it difficult for them to engage.

- Standards setting is typically a long process, and time is of the essence.

So while these new cybersecurity standards must be developed with the requirements of open source development processes and communities in mind, there is no clear path on how to do so in the time available. It is also important to note that it is similarly necessary that these standards be developed in a manner that also includes the requirements of proprietary software development, large enterprises, vertical industries, and small and medium enterprises.

Despite these challenges, a foundation for progress exists. The leading open source communities and foundations have for years developed and practised secure software development processes. These are processes that have often defined or set industry best practices around things such as coordinated disclosure, peer review, and release processes. These processes have been documented by each of these communities, albeit sometimes using different terminology and approaches. We hypothesise that the cybersecurity process technical documentation that already exists amongst the open source communities can provide a useful starting point for developing the cybersecurity processes required for regulatory compliance.

We hope that our specifications could inform the formal standardisation processes of at least one of the European Standards Organisations. Given the tight time horizon for implementation of the CRA, we believe that this immediate start will provide a constructive environment to host the technical discussions necessary for the stewards, contributors, and adopters of open source to meet the requirements set forth in these new regulations.

We invite you to join our collaborative effort to create specifications for secure open source development: Contribute your ideas and participate in the magic that unfolds when open source foundations, SMEs, industry leaders, and researchers combine forces to tackle big challenges. To stay updated on this initiative, sign up for our mailing list.

March 19, 2024

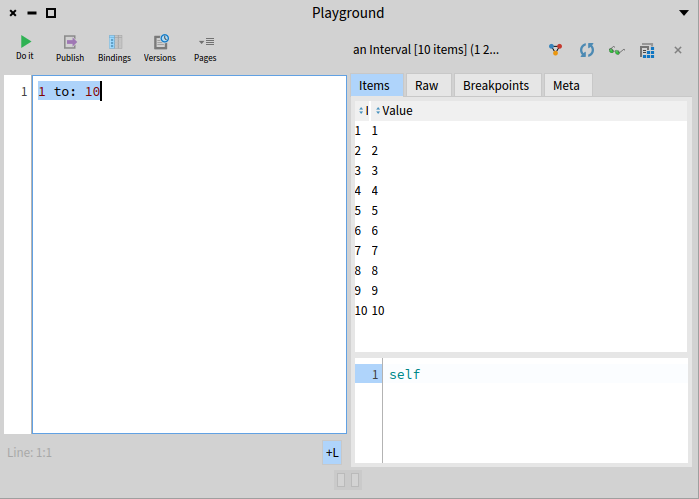

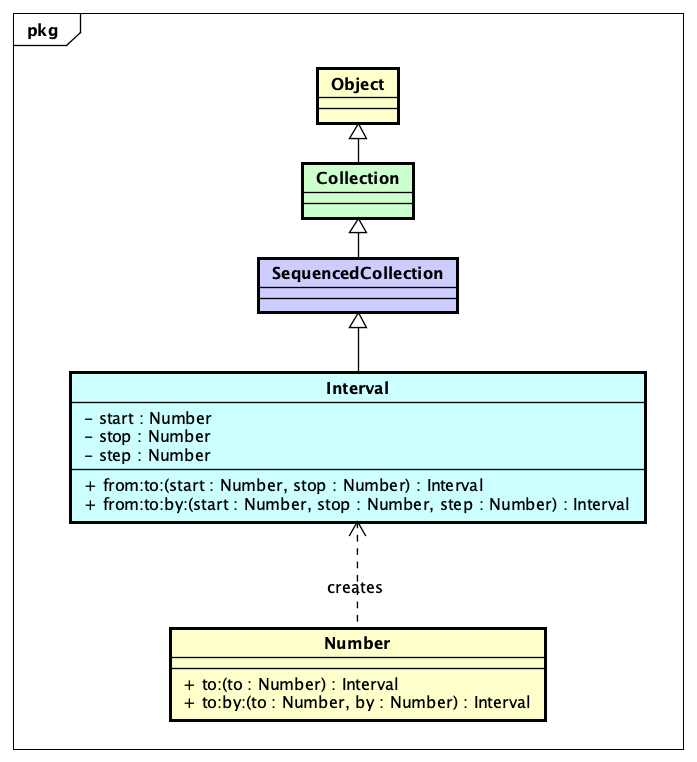

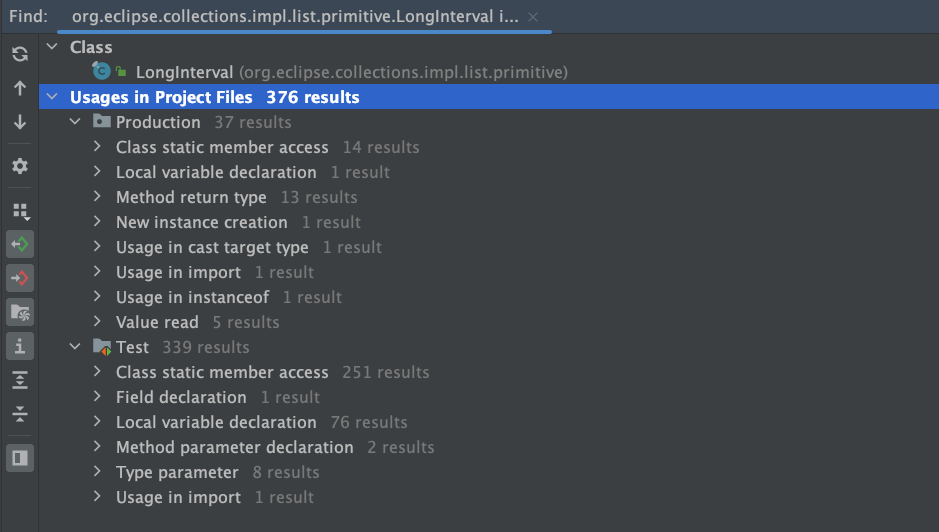

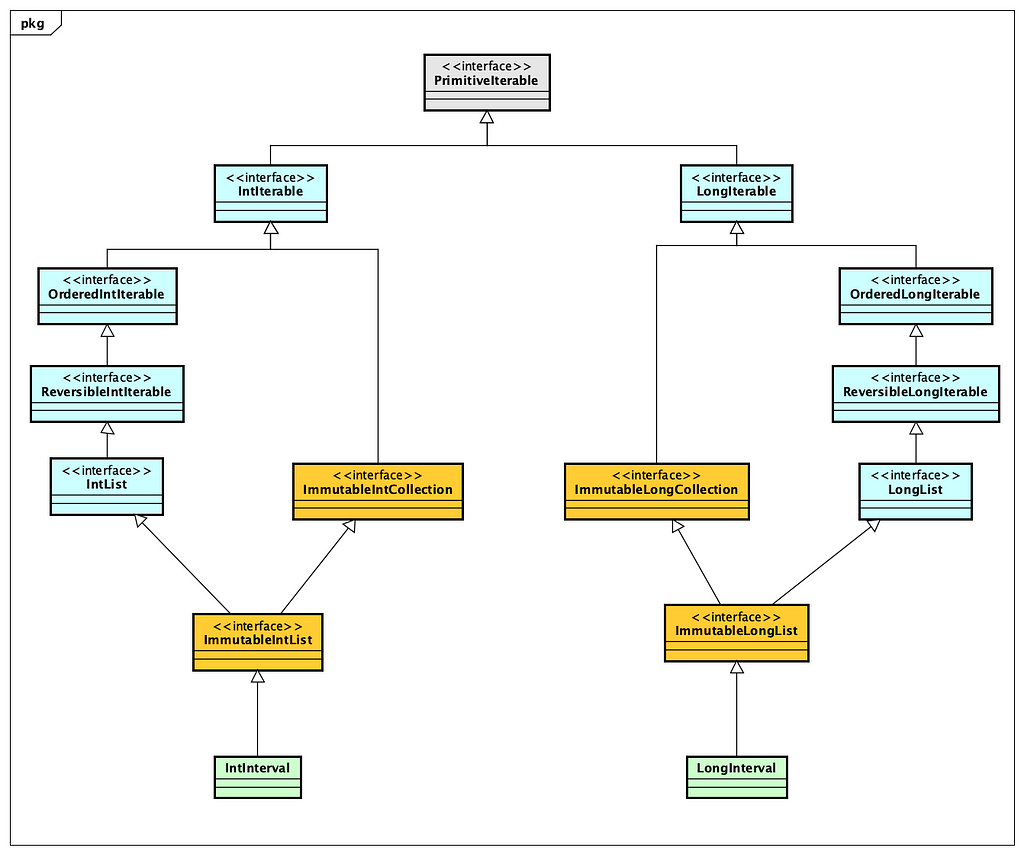

Visualizing Eclipse Collections after Twenty Years of Development

by Donald Raab at March 19, 2024 07:37 PM

It’s hard to see the forest when you keep walking among the trees.

This year is the 20th year that I have been working on Eclipse Collections. To kick off the official 20th anniversary celebration in a technical blog, I wanted to create a fresh visualization of Eclipse Collections features to get new developers acquainted with this amazing library.

In a code base with many packages, many types, and over one million lines of code (including test code), it can be easy to get lost browsing while looking through files. There is an organized package structure to Eclipse Collections, but if you are new to the library, it may not be obvious where to get started. I’m leaving this mind map here, with some useful links to help folks find the things they might be looking for.

This blog may be the “Just getting started” guide some folks are looking for as they begin their journey of discovery. Eclipse Collections contains everything I ever wanted in a collections library for Java. I hope Eclipse Collections will be the same for many of you. My intention in writing this blog is for it to be a good reference for using the library in your development adventures. I plan to refer to it myself on my own continuing adventures over the next 5… 10… 15… maybe 20 years.

Good luck and enjoy your journey!

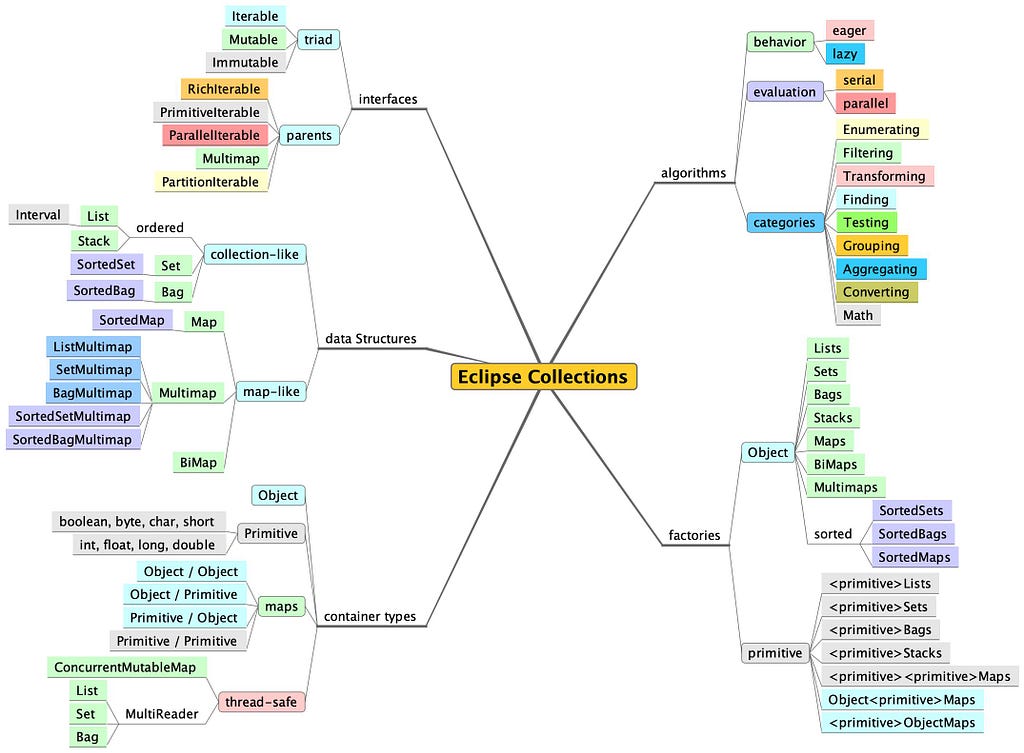

Interfaces

If you want to find the interfaces, you need to look at the eclipse-collections-api module. In this module you will find most of the parent interfaces, like RichIterable, PrimitiveIterable, and ParallelIterable. Multimap and PartitionIterable are located in two different packages. Eclipse Collections had a design goal of cleanly separating interface from implementation. We want developers to refer only to interfaces whenever possible. This module contains primarily interfaces. The one exception to this are collection factory classes, which are loaded with implementations dynamically.

In order to understand the symmetry of the triad of interfaces, which include an Iterable, Mutable, and Immutable version for each type (e.g. ListIterable, MutableList, ImmutableList), I recommend reading the following blog.

Rich, Lazy, Mutable, and Immutable Interfaces in Eclipse Collections

There is only one LazyIterable interface. LazyIterable is not a parent interface, as it extends RichIterable. LazyIterable substitutes co-variant overrides for any methods that should be lazy and return a LazyIterable. Any collection type, whether iterable, mutable or immutable can create a lazy view of itself by calling asLazy(), which will return a LazyIterable.

Data Structures

The data structures for Eclipse Collections are split between interfaces and implementation. The interfaces are located at the module and links I shared above. The implementations are located in the eclipse-collections module. The types you are probably most interested are the implementations of List, Set, Map, and Bag. The Mutable implementations of these types in Eclipse Collections are named FastList, UnifiedSet, UnifiedMap and HashBag. Most of the time you will never see these names in code, assuming you are using the interfaces MutableList, MutableSet, MutableMap, and MutableBag and create the collections using the Lists, Sets, Maps, and Bags factories.

Container Types

There are Object and primitive containers in Eclipse Collections. For Map types, there are Object/Object, primitive/primitive, Object/primitive, and primitive/Object combinations. There are also some thread-safe container types in Eclipse Collections including both concurrent and MultiReader containers.

The best way to learn about the specialized Data Structures and Container Types in Eclipse Collections is to check out the following blog series.

Blog Series: The missing Java data structures no one ever told you about

Algorithms

Eclipse Collections supports eager and lazy behaviors, as well as serial and parallel evaluation. The best way to understand the difference between eager and lazy behaviors is to read the following blog. You can also learn how the library evolved support from eager, to fused, to lazy over time.

The above blog only covers serial examples. If you would like to read more about the parallel capabilities in Eclipse Collections, the following blog is a great place to start.

The unparalleled design of Eclipse Collections

Java is missing a feature that I remember fondly from my days as a Smalltalk developer. The missing feature is a code organization tool known as Method Categories. Method categories allow you to group methods together in a class. The following are the categories I would use in Eclipse Collections types if a method categorization feature was available in Java.

✅ Enumerating

✅ Filtering

✅ Transforming

✅ Finding

✅ Testing

✅ Grouping

✅ Aggregating

✅ Converting

✅ Math

The following blog covers some of the features in the categories above. There are over 100 methods on the RichIterable parent interface, and even more in subtypes. Most of the methods fit in one of these categories.

Getting Started with Eclipse Collections — Part 4

Factories

Eclipse Collections includes factories for Mutable, Immutable, MultiReader and other more specialized types. The factory classes in Eclipse Collections are named by taking a type name (e.g., List) and pluralizing it (e.g. Lists). The following blogs will teach you everything you ever wanted to know about the collection factories in Eclipse Collections.

- As a matter of Factory — Part 1 (Mutable)

- As a matter of Factory — Part 2 (Immutable)

- As a matter of Factory — Part 3 (Method Chaining)

Twenty Years! Woo hoo!

In 2004, I didn’t think I would ever contribute anything to open source. I certainly didn’t think I would create something that would be open sourced from Goldman Sachs and used and contributed to by so many developers and projects. Here I am in 2024, celebrating 20 years of using and working on this amazing library. Crazy!

I think all that is left is to tell you how to download the library in your own projects. Of course, there is a blog for that.

Getting Started with Eclipse Collections — Part 1

Thank you for reading this blog, and for spending your valuable time learning some things about Eclipse Collections. I hope the library will be as useful and inspiring to you as it has been to me. If you find it useful and would like to contribute, we always welcome new contributors. If you don’t have a code contribution, but would like to advocate for and help others discover cool features in the library, then write a blog or an article. I keep a running list of articles about Eclipse Collections updated on the GitHub wiki linked below.

Enjoy!

I am the creator of and committer for the Eclipse Collections OSS project, which is managed at the Eclipse Foundation. Eclipse Collections is open for contributions.

Jakarta EE Developer Survey 2024

by Jacob Harris at March 19, 2024 01:25 PM

The 2024 Jakarta EE Developer Survey is now open! Through this annual survey, we want to gather valuable insights into the needs, priorities, and requirements of the enterprise Java developer community.

March 13, 2024

WTP 3.33 Released!

March 13, 2024 02:00 PM

March 12, 2024

Reminder: Eclipse Theia Community Call March 14th, 2024

by John Kellerman at March 12, 2024 03:52 PM

The Eclipse Theia community is a dynamic and expanding ecosystem, reflecting the dedicated efforts and enthusiasm of its members. The Theia Community Call is an open forum intended to provide updates on Theia, foster discussion within the ecosystem, and engage the community of Theia adopters, contributors, and users. This call presents an valuable opportunity to stay informed about the latest in Theia, contribute to its ecosystem, and partake in discussions with fellow community members.

Why Attend?

The Theia Community Call serves as an important venue for:

- Gaining comprehensive updates on Theia and its surrounding ecosystem.

- Participating in discussions about the project's progress, challenges, and opportunities.

- Offering suggestions and agenda items, thereby contributing to Theia's future direction.

Your involvement and feedback play a vital role in Theia's ongoing development. Whether you are deeply involved in the project or have a budding interest, your participation is greatly welcomed and appreciated.

Meeting Details

- Date: March 14th, 2024

- Time: 4pm Central European Time (CET)

- Location: Zoom Meeting

- Meeting ID: 820 4658 7932

- Passcode: 117021

Agenda Highlights

- Community Update by Jonas: An in-depth update on the most recent developments and releases within the Theia community.

How to Contribute

We encourage the community to actively contribute to the agenda. If you have topics, questions, or discussions in mind that you believe would benefit the community, please propose your ideas by initiating a [discussion](https://github.com/eclipse-theia/theia/discussions) and tagging @JonasHelming. Your insights and contributions are essential for a diverse and enriching conversation.

Navigating the IoT & Edge Landscape: Insights from the 2023 Commercial Adoption Survey Report

by Clark Roundy at March 12, 2024 01:42 PM